Popup Window: Source Spectral Models:

Spatial Models: Related FTOOLS: Also see |

Likelihood Tutorial: Binned (v03)

This version of the Binned Likelihood Tutorial is for use with ScienceTools-09-20-00, and higher, which include gtexpcube2. It is strongly recommended that the old gtexpcube application not be used for Likelihood analysis any longer—unless your work requires that you use an older version. (See ScienceTools-09-20-00 New Feature Summary in confluence.)

Note: If you are continuing to use a prior version of ScienceTools refer to the now archived version 02 of the binned likelihood analysis.

Detection, flux determination, and spectral modeling of Fermi LAT sources is accomplished by a maximum likelihood optimization technique. This narrative gives a step-by-step description for performing a binned likelihood analysis.

Binned vs Unbinned Likelihood

(see also e.g. Abdo, A. A. et al. 2009, ApJS, 183, 46).

Unbinned likelihood analysis is the preferred method for spectral fitting of the LAT data. However, a binned analysis is also provided for cases where the unbinned analysis cannot be used. For example, the memory required for the likelihood calculation scales with number of photons and number of sources. This memory usage becomes excessive for long observations of complex regions, necessitating the use of a binned analysis. (For more detailed information, see the Likelihood Overview.)

Prerequisites

You will need:

- event data file (often referred to as the FT1 file)

- spacecraft data file (sometimes referred to as the FT2 file, also known as the livetime file)

- Background models (galactic diffuse emission model and extragalactic isotropic emission model)

Note: Instructions for obtaining the data used in these examples are provided in the appropriate steps, below.

Assumptions

The examples used in this exercise assume that you:

- are running in a bash shell on a SLAC Central Linux redhat5 64-bit machine.

[See Example: Setup (when running Science Tools on SLAC Central Linux).]

- are using an SCons optimized redhat5-x86_64-64bit-gcc41 build for

ScienceTools-09-20-00, or higher.

- have set up a directory for your data (e.g., binned_likelihood_data) in your User Work Space (and that you will run the science tools from within that directory).

Note: These files can be quite large, and you should not attempt to run them in your home directory.

- will use the data files provided (which are available for download).

Note: You can also select your own data; see the LAT Data Access (green navbar) section of this workbook.

Steps

| It is assumed that you are running on SLAC Central Linux; if not, the setup instructions can readily be adapted to your environment. | |

| Since there is computational overhead for each event associated with each diffuse component, it is useful to filter out any events that are not within the extraction region used for the analysis. | |

| These simple FITS images enable you to inspect your data and help to pick out obvious candidate sources. | |

When you use the latest Galactic diffuse emission model, in a likelihood analysis you should also use the corresponding model for the Extragalactic isotropic diffuse emission, which includes the residual cosmic-ray background. Save them in the same directory as your data. |

|

| The source model XML file contains the various sources and their model parameters to be fit using the gtlike tool. | |

The binned counts map is used to reduce computation requirements in regions with large numbers of events. |

|

| Precomputing the livetime for the dataset speeds up the exposure calculation. | |

The gtexpcube2 tool creates exposure maps that correspond to the counts maps generated above and are used in the likelihood analysis. The maps cover the same region of the sky as the counts maps and have separate planes for different energies spanning the energy range of the counts maps. Note: Available as of January 2011 in ScienceTools-09-20-00 and higher, gtexpcube2 replaces the "on-the-fly" binned exposure map calculation that was provided by gtsrcmaps, and it also replaces the gtexpcube application from the map_tools package. It is strongly recommended that the old gtexpcube application no longer be used for Likelihood analysis. (See ScienceTools-09-20-00 New Feature Summary in confluence.) Important! The exposure needs to be recalculated if the ROI, zenith angle, time, event class, or energy selections applied to the data are changed. For the binned analysis, this also includes the spatial and energy binning of the 3D counts map. |

|

| These are used as templates for the various source terms in the likelihood fitting performed by gtlike. | |

| Fitting the data to the model can give flux, errors, spectral index, and more. | |

| The model map can be compared to the counts map to verify the quality of the fit. | |

1. Setup

Be sure that the variation of ScienceTools you are using matches your operating system. The examples given in this exercise were running on a RHEL5-64 bit machine. If you are running on a SLAC Central Linux machine:

- Refer to the assumptions, above, then login to your home directory.

- If you have not already done so, create a directory (e.g., binned_likelihood_data) for your data in your User Work Space:

mkdir /afs/slac/g/glast/users/username/binned_likelihood_data

- Change to a redhat5 64-bit machine: ssh rhel5-64

- Setup your environment.

Tip: Use the emacs editor (available from the prompt by entering emacs) and create a setup script, e.g., setup_sciencetools-09-20-00. [See Example: Setup (when running Science Tools on SLAC Central Linux).]

Note: Since the data files can be very large, the example setup script assumes that you have already set up a data directory (e.g., likelihood_data) in your User Work Space where you will place your data (and from which you will run the science tools).

- Execute your setup script: source setup_sciencetools-09-20-00

- Change to your data directory: cd $My_Data

Note: All of the following exercises assume that you are running the science tools from within your data directory.

2. Get the Data and Make Subselections from the Event Data

- Get the Data. The original data selected for use in this analysis was:

- Search Center (RA, DEC) =(193.98, -5.82)

- Radius = 40 degrees

- Start Time (MET) = 239557417 seconds (2008-08-04 T15:43:37)

- Stop Time (MET) = 255398400 seconds (2008-02-04 T00:00:00)

- Minimum Energy = 100 MeV

- Maximum Energy = 100000 MeV

Important! The ROI used by the binned likelihood analysis is defined by the 3D counts map boundary. Region selection used in the data extraction step (which is conical) must fully contain the 3D counts map spatial boundary (which is square). Hence, a larger data selection is used for the binned likelihood (i.e., Radius = 40 degrees) than is used for the unbinned likelihood.

Note: For such a large region (40 deg selection radius), the zenith cut as applied using the gtmktime ROIcut can be very harsh, depending on where the source is in the sky. There is actually some argument to use a smaller field, and to make the cube a bit smaller as a general practice; however, this depends on the analysis desired. The following FT1 and FT2 files (which were used in this exercise) are provided below. (These are not the same files provided for the unbinned likelihood analysis; as indicated by the note above, the FT1 files are much larger.) You can download these files and place them in your work area, or you can obtain your own data [see the LAT Data Access (green navbar) section of this workbook].

- L100817204651E0D2F37E37_PH00.fits (338,590,080 KB, FT1 file)

- L100817204651E0D2F37E37_PH01.fits (240,350,400 KB, FT1 file)

- binned_spacecraft.fits (61,608,960 KB, FT2 file)

- Enter: cd $My_Data and place your data in this directory.

Note: For the purposes of this exercise, it is assumed that you will run all commands used in this exercise from this directory. If you logoff and want to resume work, your setup procedure is as follows:

- Login to your home directory.

- Enter the following command: ssh RHEL5-64

- Source your setup script: source setup_sciencetools-09-20-00

- Change to your data directory (e.g., cd $My_Data).

- Combine the FT1 events files. In order to combine the two events files for your analysis, you must first generate a text file listing the event data files to be included:

|

This text file (binned_events.txt) will be used in place of the input fits filename when running gtselect. (See gtselect Help and gtselect Parameters.)

- Run gtselect. Things to note:

- Selecting photons. When analyzing a point source, it is recommended that you include events with a high probability of being photons. To do this, use gtselect to perform cuts on the event class, keeping only event classes including and greater than (diffuse class).

For example: gtselect evclsmin=3 evclsmax=4

(evclsmin and evclsmax are hidden parameters, so to use them for a cut, you must type them in the command line.)- Selecting the ROI. For this example, the diffuse class photons within a 40 degree region of interest (ROI) centered on the blazar 3C 279 are selected.

|

Notes:

- The gtselect syntax requires that you use an @ before the binned_events.txt filename to indicate that this is a text file input rather than a fits file.

- We also selected the energy range and the maximum zenith angle value (105 degrees).

The Earth's limb is a strong source of background gamma rays, and we filter them out with a zenith-angle cut; the value of 105 degrees is the cut most commonly used.

Example: 3C279_binnedevents_filtered.fits (optional).

- Calculate exposure. After the data selection is made, you must correctly calculate the exposure. There are several options for calculating livetime depending on your observation type and science goals. For a detailed discussion of these options, see the Cicerone's Likelihood Livetime and Exposure.

Zenith angle and the Good Time Intervals (GTIs). To deal with the cut on zenith-angle in this analysis, we use the gtmktime tool to exclude the time intervals where the zenith cut intersects the ROI from the list of good time intervals (GTIs). (See gtmktime Help and gtmktime parameters.) This is especially needed if you are studying a narrow ROI (with a radius of less than 20 degrees), as your source of interest may come quite close to the Earth's limb. To make this correction you have to tell gtmktime to "Apply ROI-based zenith angle cut" by answering "yes" at the prompt. (See gtmktime example, below.)

gtmktime also provides an opportunity to select GTIs by filtering on information provided in the spacecraft file. (See the Cicerone's LAT File Types: Spacecraft File.) The current gtmktime filter expression recommended by the LAT team is:

DATA_QUAL==1 && LAT_CONFIG==1 && ABS(ROCK_ANGLE)<52.

gtmktime example:This excludes time periods when some spacecraft event has affected the quality of the data, ensures the LAT instrument was in normal science data-taking mode,and requires that the spacecraft be within the range of rocking angles used during nominal sky-survey observations.

The following is an example of running gtmktime for our analysis of the region surrounding 3C 279:

prompt> gtmktime |

Example: 3C279_binnedevents_gti.fits (optional)

- View DSS keywords. To view the DSS keywords in a given extension of a data file, use the gtvcut tool and review the data cuts on the EVENTS extension. (See gtvcut Help and gtvcut parameters.)

gtvcut:This provides a listing of the keywords reflecting each cut applied to the data file and their values, including the entire list of GTIs as shown in the example below:

prompt> gtvcut suppress_gtis=yes |

Note: This display includes the RA, Dec and ROI of the data selection, as well as: the energy range in MeV; the event class selection; the zenith angle cut applied; and it shows that the time cuts to be used in the exposure calculation are defined by the GTI table.

| Caution: Some of the Science Tools will not be able to run if you have multiple copies of the same DSS keyword. This can happen if the position used in extracting the data from the data server is different than the position used with gtselect. It is wise to review the keywords for duplicates before proceeding. If you do have keyword duplication, it is advisable to regenerate the data file with consistent cuts. |

3. Make Counts Maps from the Event Files

Identify candidate sources. To identify candidate sources and to ensure that the field looks sensible, create a counts map of the region-of-interest (ROI), summed over photon energies.

Use the gtbin tool with the "CMAP" option (see gtbin Help and gtbin Parameters):

gtbin with "CMAP" option:| prompt> gtbin This is gtbin version ScienceTools-09-20-00 Type of output file (CCUBE|CMAP|LC|PHA1|PHA2) [] CMAP Event data file name[] 3C279_binnedevents_gti.fits Output file name[] 3C279_binned_counts_map.fits Spacecraft data file name[] NONE Size of the X axis in pixels[] 320 Size of the Y axis in pixels[] 320 Image scale (in degrees/pixel)[] 0.25 Coordinate system (CEL - celestial, GAL -galactic) (CEL|GAL) [CEL] First coordinate of image center in degrees (RA or galactic l)[] 193.98 Second coordinate of image center in degrees (DEC or galactic b)[] -5.82 Rotation angle of image axis, in degrees[0.] Projection method e.g. AIT|ARC|CAR|GLS|MER|NCP|SIN|STG|TAN:[AIT] prompt> |

To display the binned counts map using the visualization tool ds9, enter :

| prompt> ds9 3C279_binned_counts_map.fits & |

3C279_binned_counts_map.png |

| From this image you can see that there are no strong sources near the central two sources, 3C 279 and 3C 273. |

Example: 3C279_binned_counts_map.fits (optional).

4. Download the Latest Background Models

When using the latest Galactic diffuse emission model (e.g., gll_iem_v02.fit) in a likelihood analysis, also use the corresponding model for the Extragalactic isotropic diffuse emission (which includes the residual cosmic-ray background). At the time this was written, November 03, 2010, the latest model was isotropic_iem_v02.txt.

- Download the following files from the Fermi LAT U33 Disk Browser:

- gll_iem_v02.fit

- isotropic_iem_v02.txt

- Place these files in the same directory with your data.

Notes:

- For more information, refer to the Diffuse Model for Analysis of LAT Data page in confluence.

- The isotropic spectrum is valid only for the P6_V3_DIFFUSE response functions and only for data sets with front + back events combined.

5. Create a Source Model XML File

The gtlike tool reads the source model from an XML file. (See gtlike Help and gtlike Parameters. The source model can be created using the model editor tool or by editing the file directly within a text editor. Review the help information in the ModelEditor to learn how to run this tool.

Note: Given the dearth of bright sources in the extraction region we have selected, our source model file will be fairly simple, comprising only the Galactic and Extragalactic diffuse emission, and point sources to represent the blazars 3C 279 and 3C 273. For this example, we use the diffuse background model as recommended by the LAT Team:

source model XML file:

For more details on the available XML models, see: Likelihood Tutorial: Unbinned – Create a Source Model XML File.

6. Create a 3D (Binned) Counts Map

For binned likelihood analysis, a three-dimensional counts map (counts cube) with an energy axis is required. The gtbin application performs this tool with the CCUBE option. (See gtbin Help and gtbin Parameters.)

The binning of the counts map determines the binning of the exposure calculation. The likelihood analysis may lose accuracy if the energy bins are not sufficiently narrow to accommodate more rapid variations in the effective area with decreasing energy below a few hundred GeV. For a typical analysis, ten logarithmically spaced bins per decade in energy are recommended. The analysis is less sensitive to the spatial binning and 0.2 deg bins are a reasonable standard.

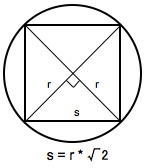

This counts cube is a square binned region that must fit within the circular acceptance cone defined during the data extraction step. This is the reason we expanded the data selection from 20 degrees (for unbinned analysis) to 40 degrees (for binned analysis). To find the maximum size of the region your data will support, find the side of a square that can be fully inscribed within your circular acceptance region (multiply the radius of the acceptance cone by sqrt(2)). For this example, the maximum length for a side is 56.56 degrees. This means the entire area covered by the smaller acceptance region (40 degree diameter) used in the unbinned analysis will be included in this analysis as well. For a comparable analysis here, we use the same central coordinate, and a 40x40 degree counts cube.

To create the counts cube, we run gtbin as follows:

prompt> gtbin |

Example: 3C279_binned_counts_cube.fits (optional).

7. Compute Livetimes

To speed up the exposure calculations performed by Likelihood, it is helpful to precompute the livetime as a function of sky position and off-axis angle. The gtltcube tool creates a livetime cube, which is a HealPix table, covering the full sky, of the integrated livetime as a function of inclination with respect to the LAT z-axis. See gtltcube Help and gtltcube Parameters.

Example of how to run gtltcubeprompt> gtltcube phibins=4 |

Note: Values such as "0.1" for "Step size in cos(theta)" are known to give unexpected results. Use "0.09" instead.

For more information about the livetime cubes see the Cicerone's Livetime and Exposure.

Example: 3C279_binned_ltcube_phi4.fits (optional).

8. Compute 3D Exposure Map (using gtexpcube2)

The gtexpcube2 tool creates exposure maps that correspond to the counts maps generated above; these exposure maps are then used in the likelihood analysis. The maps cover the same region of the sky as the counts maps and have separate planes for different energies spanning the energy range of the counts maps. (See gtexpcube2 Help and gtexpcube2 parameter documentation.)

Example of how to run gtexpcube2prompt> gtexpcube2 |

Example: bexpmap_phi4.fits (optional).

Note: As with the unbinned likelihood analysis, the exposure needs to be recalculated if the ROI, zenith angle, time, event class, or energy selections applied to the data are changed. For the binned analysis, this also includes the spatial and energy binning of the 3D counts map.

9. Compute Source Maps

The gtsrcmaps tool creates model counts maps for each source in the XML model. The model counts maps are the model intensity distributions multiplied by exposure and convolved with the effective PSF, for use with the binned likelihood analysis. (See gtsrcmaps Help and gtsrcmaps Parameters.)

Example of how to run gtsrcmaps:prompt> gtsrcmaps |

Example: 3C279_srcmaps.fits (optional).

Tip: Since source map generation for the point sources is fairly quick, and maps for many point sources may take up a lot of disk space, it may be preferable to skip precomputing the source maps for these sources at this stage. gtlike will compute these on the fly if they appear in the XML definition and a corresponding map is not in the source maps FITS file. (See gtlike Help and gtlike Parameters.)

To skip generating source maps for point sources, specify "ptsrc=no" on the command line when running gtsrcmaps. (See gtsrcmaps Help and gtsrcmaps Parameters.)

10. Run gtlike

Note: Prior to running gtlike for Unbinned Likelihood, it is necessary to calculate the diffuse response for each event (when that response is not precomputed). However, for Binned Likelihood analysis the diffuse response is calculated over the entire bin, so this step is not necessary.

Refer to gtlike Help and gtlike Parameters as necessary, and run the gtlike application. In the following example, most of the entries prompted for are fairly obvious. In addition to the various XML and FITS files, the user is prompted for a choice of IRFs, the type of statistic to use, the optimizer, and some output file names.

Note that the fitted parameters are saved to an output XML model file (3C279_output_model.xml) for later use:

gtlike:prompt> gtlike refit=yes plot=yes sfile=3C279_binned_output_model.xml |

The statistics available are:

- UNBINNED

In a standard unbinned analysis, such as the one described in this tutorial, the parameters for the spacecraft, event, and exposure files must be given.

- BINNED

In a binned analysis, the counts map and exposure hypercube files must be given, and the spacecraft, event, and exposure files are ignored.

Optimizers. There are five optimizers from which to choose:

- DRMNGB

- DRMNFB

- NEWMINUIT

- MINUIT

- LBFGS

Tip: Generally speaking, the faster way to find the parameters estimation is to:

- Use the DRMNGB (or DRMNFB) approach to find initial values.

- Then use MINUIT (or NEWMINUIT) to find more accurate results.

The application proceeds by reading in the spacecraft and event data, and if necessary, computing

the source maps.

Minuit did successfully converge. (OMITTED MANY OUTPUTS HERE...) Computing TS values for each source (4 total) Photon fluxes are computed for the energy range 100 to 100000 MeV 3C 273: 3C 279: EG_v02: GAL_v02: WARNING: Fit may be bad in range [100, 199.526] (MeV) Total number of observed counts: 103025 -log(Likelihood): 270514.6334 Writing fitted model to 3C279_binned_output_model.xml |

Since we selected 'plot=yes' in the command line, a plot of the fitted data appears:

3C279_binned_spectral_fit.png |

In the first plot:

The counts/MeV vs MeV are plotted. The points are the data, and the lines are the models. Error bars on the points represent sqrt(Nobs) in that band, where Nobs is the observed number of counts. The black line is the sum of the models for all sources. The colored lines follow the sources as follows:

- Black - summed model

- Red - first source in results.dat file (see below)

- Green - second source

- Blue - third source

- Magenta - fourth source

- Cyan - the fifth source

In this case:

- 3C273 = red

- 3C279 = green

- extragalactic background = blue

- galactic background = magenta

Note: If you have more sources, the colors are reused in the same order.

The second plot:

Gives the residuals between your model and the data:

Error bars here represent (sqrt(Nopbs))/Npred, where Npred is the predicted number of counts in each band based on the fitted model.

To assess the quality of the fit:

- Look for the words at the top of the output "<Optimizer> did successfully

converge." Successful convergence is a minimum requirement for a good fit.

- Next, look at the energy ranges that are generating warnings of bad fits. If any of these ranges affect your source of interest, you may need to revise the source model and refit.

- You can also look at the residuals on the plot (bottom panel). If the residuals indicate a poor fit overall (e.g., the points trending all low or all high), consider changing your model file, perhaps by using a different source model definition, and refit the data.

- If the fits and spectral shapes are good, but could be improved, you may wish to simply update your model file to hold some of the spectral parameters fixed.

For example, by fixing the spectral model for 3C 273, you may get a better quality fit for 3C 279.

Closing the plot:

When you close the plot, you will be asked if you wish to refit the data.

Hitting 'return' will instruct the application to fit again.

We are happy with the result, so we type 'n' and end the fit.

Refit? [y] |

When it completes, gtlike generates two standard output files:

- results.dat with the results of the fit, and

- counts_spectra.fits with predicted counts for each source for each energy bin.

The points in the plot above are the data points from the counts_spectra.fits file, while the colored lines follow the source model information in the results.dat file.

If you rerun the tool in the same directory, these files will be overwritten by default. Use the clobber=no option on the command line to keep from overwriting the output files.

Tip: The fit details and the value for the -log(likelihood) are not recorded in the automatic output files. You should therefore consider logging the output to a text file for your records by using "> fit_data.txt" (or something similar) with your gtlike command:

prompt> gtlike refit=yes plot=yes sfile=3C279_output_model.xml > fit_data.txt |

In the above example:

Output XML File. The 'sfile' parameter to request that the model results be written to an output XML file (3C279_binned_output_model.xml). This file contains the source model results that were written to results.dat at the completion of the fit. If you have specified an output XML model file and you wish to modify your model while waiting at the 'Refit? [y]' prompt, you will need to copy the results of the output model file to your input model before making those modifications.

If you do not specify a source model output file with the 'sfile' parameter NO input xml file is written and nothing new is generated.

Scale. The results of the likelihood analysis have to be scaled by the quantity called "scale" in the XML model in order to obtain the total photon flux (photons cm-2 s-1) of the source. You must refer to the model formula of your source for the interpretation of each parameter. (See Create a Source Model XML File.)

In the example, the 'prefactor' of our power law model of 3C273 has to be scaled by the factor 'scale'=10-9. For example the total flux of 3C273 is the integral between 100 MeV and 100000MeV of:

Prefactor x scale x (E /100)index=(12.5052x10-9) * (E/100)-2.75194

Errors reported with each value in the results.dat file are 1σ estimates (based on inverse-Hessian at the optimum of the log-likelihood surface).

Tip: To fetch the index and error from an unbinned analysis fit: model = like.model for 'self.sourceName', just substitute a string containing the exact source name in the model.xml file. For more "fetch" tips, see Fetching Fit Parameters. |

Other Useful Hidden Parameters:

If you are scripting and wish to generate multiple output files without overwriting, the 'results' and 'specfile' parameters allow you to specify output filenames for the results.dat and counts_spectra.fits files, respectively.

11. Create a Model Map

For comparison to the counts map data, we create a model map of the region based on the fit parameters. This map is essentially an infinite-statistics counts map of the region-of-interest based on our model fit.- The gtmodel application reads in the fitted model, applies the proper scaling to the source maps, and adds them together to get the final map. (See gtmodel Help and gtmodel Parameters.)

prompt>gtmodel outtype=ccube |

Example: 3C279_model_map.fits (optional).

- In order to compare the model results to the data, the two maps must have the same geometry, so it is necessary to again generate a counts map for the modeled region using gtbin. (See gtbin Help and gtbin Parameters.)

prompt> gtbin |

Example: 3C279_binned_counts_map_fitted.fits (optional).

- At the prompt, enter:

ds9 3C279_model_map.fits 3C279_binned_counts_map_fitted.fits

to display 3C279_model_map.fits and 3C279_binned_counts_map_fitted.fits

side-by-side:

| Model map plotted next to the the energy-summed counts map for the data: 3C279_binned_map_comparison.png |

- To understand how well the fit matches the data, subtract the model map from the counts map using the FTOOL farith:

prompt> farith |

Example: 3C279_residual.fits (optional).

- At the prompt, enter: ds9 and display 3C279_residual.fits as shown below (see ds9).

| Note: Region annotations were made on the following image was made using SAOImage ds9 v 6.2 which, at the time of this writing (01/07/2011), is not currently available on SLAC Linux. |

3C279_binned_residuals.png |

Things to note:

The next step would be to iterate the analysis with the additional source included in the XML model file. |

| Owned by: Jim Chiang |

| Last updated by: Chuck Patterson 02/01/2011 |