International Committee for Future Accelerators (ICFA)

Standing Committee on Inter-Regional Connectivity (SCIC)

Chairperson: Professor Harvey Newman, Caltech

ICFA SCIC Network Monitoring Report

Prepared by the ICFA SCIC Monitoring Working Group

On behalf of the Working Group:

Les Cottrell cottrell@slac.stanford.edu

Internet performance is improving each year with packet losses typically

improving by 40-50% per year and Round Trip Times (RTTs) by 10-20% and, for some

regions such as S. E. Europe, even more. Geosynchronous satellite connections are

still important to countries with poor telecommunications infrastructure. In

general for HEP countries satellite links are being replaced with land-line

links with improved performance (in particular for RTT).

Links between the more developed regions including Anglo America, Japan and

Europe are much better than elsewhere (5 - 10 times more throughput achievable).

Regions such as S.E. Europe, Central Asia and Russia are catching up with the more developed regions

(at the present rate of progress they should catch up by the end of the decade). However, China,

the Middle East, Latin America and Africa are several years behind in

performance compared to the more developed regions, and do not appear to be

catching up. Even worse, India appears to be falling further behind.

For modern HENP collaborations and Grids there is an increasing need for

high-performance monitoring to set expectations, provide planning and

trouble-shooting information, and to provide steering for applications.

To quantify and help bridge the Digital Divide, enable world-wide

collaborations, and reach-out to scientists world-wide, it is imperative to

continue and extend the monitoring coverage to all countries with HENP programs

and significant scientific enterprises. This in turn will require help from ICFA

to identify sites to monitor and contacts for those sites, plus identifying

sources of on-going funding support to continue and extend the monitoring.

The formation of this working group was requested at the ICFA/SCIC

meeting at CERN in March 2002 [icfa-mar02]. The mission is to: Provide

a quantitative/technical view of inter-regional network performance to enable

understanding the current situation and making recommendations for improved

inter-regional connectivity.

The lead person for the monitoring working group was identified as Les

Cottrell. The lead person was requested to gather a team of people to assist in

preparing the report and to prepare the current ICFA report for the end of 2002.

The team membership consists of:

| Name | Institution | Region/Group | Email |
| --- | --- | --- | --- |
| Les Cottrell | SLAC | US | cottrell@slac.stanford.edu |
| Richard Hughes-Jones | University of Manchester | UK | rich@a3.ph.man.ac.uk |
| Sergei Berezhnev | RUHEP, Moscow State Univ. | Russia | sfb@radio-msu.net |
| Sergio F. Novaes | FNAL | S. America | novaes@fnal.gov |
| Fukuko Yuasa | KEK | Japan and E. Asia | Fukuko.Yuasa@kek.jp |
| Sylvain Ravot | Caltech | CMS | Sylvain.Ravot@cern.ch |
| Daniel Davids | CERN | CERN, Europe, LHC | Daniel.Davids@cern.ch |
| Shawn McKee | Michigan | I2 HENP Net Mon WG | smckee@umich.edu |

- Obtain as uniform a picture as possible of the present performance of the

connectivity used by the ICFA community

- Prepare reports on the performance of HEP connectivity,

including, where possible, the identification of any key bottlenecks or

problem areas.

This report may be regarded as a follow on to the May 1998 Report of the

ICFA-NTF Monitoring Working Group [icfa-98] and the

January

2003 Report of the ICFA-SCIC Monitoring Working Group [icfa-03]. The current report

updates the January 2003 report, but is complete in its own right in that it

includes the tutorial information from the January 2003 report.

There are two complementary types of Internet monitoring

reported on in this report.

- In the first we use PingER [pinger] which

uses the ubiquitous "ping" utility available standard on most modern hosts.

Details of the PingER methodology can be found in the May 1998 Report of the

ICFA-NTF Monitoring Working Group [icfa-98] and [ejds-pinger]. PingER provides
low-intrusiveness (~100 bits/s per host pair monitored¹) measurements of Round Trip Time (RTT),
loss and reachability (if a host does not respond to a set of 21 pings it is
presumed to be non-reachable). The low intrusiveness makes the method
very effective for measuring regions and hosts with poor connectivity. Since
the ping server is pre-installed on all remote hosts of interest, minimal
support is needed from the remote host (no software to install, no account
needed, etc.).

- The second method (IEPM-BW

[iepm]) is for measuring high network and application throughput between

hosts with excellent connections. Examples of such hosts are to be found at

HENP accelerator sites and tier 1 and 2 sites, major Grid sites, and major academic and

research sites in Anglo America², Japan and Europe. The method is

quite intrusive (for each remote host being monitored from a monitoring host,

it can utilize hundreds of Mbits/s for ten seconds or more each hour). It also

requires more support from the remote host. In particular an account is

required, software (servers) must be installed, disk space, compute cycles

etc. are consumed, and there are security issues. The method provides

expectations of throughput achievable at the network and application levels,

as well as information on how to achieve it, and trouble-shooting

information.
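The ping-based measurement described in the first item above can be sketched as follows. This is a minimal illustration, not the PingER code: the function name `summarize_pings` and the input format (one entry per ping sent, with `None` for a lost ping) are assumptions made for the example. Reachability follows the rule stated in the text, i.e. a host that answers none of the pings in a set is presumed non-reachable.

```python
from statistics import mean


def summarize_pings(rtts_ms):
    """Summarize one set of pings to a remote host.

    rtts_ms holds one entry per ping sent: the round trip time in
    milliseconds for a reply, or None when no reply was received.
    """
    sent = len(rtts_ms)
    replies = [r for r in rtts_ms if r is not None]
    # Loss is the percentage of pings sent that received no reply.
    loss_pct = 100.0 * (sent - len(replies)) / sent if sent else 0.0
    return {
        "sent": sent,
        "loss_pct": loss_pct,
        "avg_rtt_ms": mean(replies) if replies else None,
        "min_rtt_ms": min(replies) if replies else None,
        # No reply to any ping in the set: presumed non-reachable.
        "reachable": bool(replies),
    }


if __name__ == "__main__":
    print(summarize_pings([50.0, None, 70.0, 60.0]))
    print(summarize_pings([None] * 21))
```

Keeping the minimum RTT alongside the average is a design choice for the sketch: the minimum approximates the delay with no queuing, which is the quantity discussed later under RTTs.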

The PingER data and results extend back to the start of 1995. They thus

provide a valuable history of Internet performance. As of December 2003 there are about 33

monitoring hosts in 12 countries, monitoring over 850 remote hosts at 560 sites

in over 100 countries (see PingER

Deployment [pinger-deploy]). These countries contain over 78% of the world's

population and over 90% of the online users of the Internet. Most of the

hosts monitored are at educational or research sites. We try to get at least 2

hosts per country to help identify and avoid anomalies at a single host. The

requirements for

the remote host can be found in [host-req].

Since there are over 3700 monitoring/monitored-remote-host pairs, it is important to

provide aggregation of data by hosts from a variety of "affinity groups". PingER

provides aggregation by affinity groups such as HENP experiment

collaborator sites, Top Level Domain (TLD), Internet Service Provider

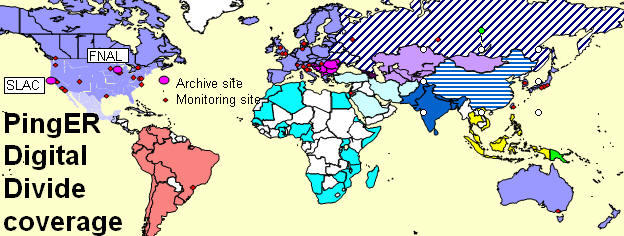

(ISP), or by world region etc. The world regions are shown below in Table 1.

They are chosen starting from the U.N. definitions [un]. We

modify the region definitions to take into account which countries have HENP

interests and to try and ensure the countries in a region have similar

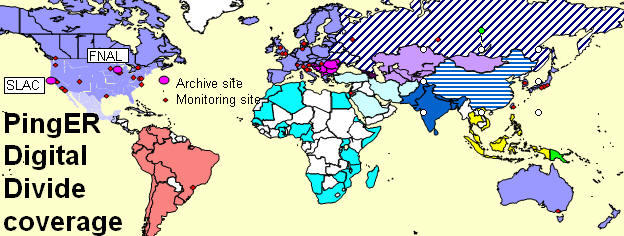

performance. The regions and countries monitored by PingER are shown in Fig. 1,

together with the monitoring and archive sites.

Figure 1: Major regions of the world for PingER aggregation by regions

The major regions are: Anglo America (2), Latin America (14), Europe (24), S.E. Europe
(9), Africa (21), Mid East (7), Caucasus (3), Central Asia (8), Russia including
Belarus & Ukraine (3), S. Asia (7), China (1) and
Australasia (2). The numbers in parentheses are the number of countries
monitored by PingER in the region. We also subdivide regions at times to provide better granularity. The major sub-regions are
obtained by separating: Central America and the Caribbean (5) from S. America
(9); Israel from the Mid-East; and the Baltic States from Europe. For the purposes
of characterizing the Digital Divide we also aggregate Anglo America, Europe,
Australasia, Japan, Taiwan and S. Korea into the Developed Countries.

To assist in interpreting the results in terms of their impact on well-known

applications, we categorize the RTTs, losses etc. into quality ranges.

These are shown below in Table 2.

Table 2: Quality ranges used for loss and RTT

| | Excellent | Good | Acceptable | Poor | Very Poor | Bad |
| --- | --- | --- | --- | --- | --- | --- |
| Loss | <0.1% | >=0.1% & <1% | >=1% & <2.5% | >=2.5% & <5% | >=5% & <12% | >12% |
| RTT | | <62.5ms | >=62.5ms & <125ms | >=125ms & <250ms | >=250ms & <500ms | >500ms |
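The quality ranges of Table 2 can be expressed as a small lookup. The sketch below is only an illustration of the table, with the function names `loss_quality` and `rtt_quality` chosen for the example. Table 2 does not say which category a loss of exactly 12% or an RTT of exactly 500ms belongs to; the sketch assumes both fall in "Bad".

```python
from bisect import bisect_right

# Lower bounds of each category after the first, taken from Table 2.
LOSS_BOUNDS_PCT = [0.1, 1.0, 2.5, 5.0, 12.0]
LOSS_LABELS = ["Excellent", "Good", "Acceptable", "Poor", "Very Poor", "Bad"]

# Table 2 defines no "Excellent" range for RTT.
RTT_BOUNDS_MS = [62.5, 125.0, 250.0, 500.0]
RTT_LABELS = ["Good", "Acceptable", "Poor", "Very Poor", "Bad"]


def loss_quality(loss_pct):
    """Return the Table 2 quality label for a packet loss in percent."""
    # bisect_right counts the bounds that are <= loss_pct, so a value
    # equal to a bound lands in the higher (worse) category.
    return LOSS_LABELS[bisect_right(LOSS_BOUNDS_PCT, loss_pct)]


def rtt_quality(rtt_ms):
    """Return the Table 2 quality label for an RTT in milliseconds."""
    return RTT_LABELS[bisect_right(RTT_BOUNDS_MS, rtt_ms)]


if __name__ == "__main__":
    for loss in (0.05, 0.5, 3.0, 20.0):
        print(loss, loss_quality(loss))
    for rtt in (40.0, 70.0, 300.0, 600.0):
        print(rtt, rtt_quality(rtt))
```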

More on the effects of packet loss and RTT can be found in the Tutorial
on Internet Monitoring & PingER at SLAC [tutorial]. Briefly:

- At losses of 4-6% or more video-conferencing becomes irritating and
non-native language speakers become unable to communicate. The occurrence of
long delays of 4 seconds or more (such as may be caused by timeouts in recovering from
packet loss) at a frequency of 4-5% or more is also irritating for
interactive activities such as telnet and X windows. According to Vern Paxson, conventional wisdom
among TCP researchers holds that a loss rate of 5% has a significant adverse
effect on TCP performance, because it will greatly limit the size of the
congestion window and hence the transfer rate, while 3% is often substantially
less serious. A random loss of 2.5% will result in Voice
Over Internet Protocol (VOIP) becoming slightly annoying every 30 seconds or
so. A more realistic burst loss pattern will result in VOIP distortion going
from not annoying to slightly annoying when the loss goes from 0 to 1%. Since
TCP throughput for the standard (Reno based) TCP stack goes as 1/sqrt(loss)
[mathis] (see M. Mathis, J. Semke, J. Mahdavi, T. Ott, "The Macroscopic
Behavior of the TCP Congestion Avoidance Algorithm", Computer
Communication Review, volume 27, number 3, pp. 67-82, July 1997), it is
important to keep losses low for achieving high throughput.
- For RTTs, studies in the late 1970s and early 1980s showed that one needs
< 400ms for high productivity interactive use. VOIP requires an RTT of <
250ms or it is hard for the listener to know when to speak.

It must be

understood that these quality designations apply to normal Internet use. For

high performance, and thus access to data samples and effective partnership in

distributed data analysis, much lower packet loss rates may be required.

Loss

Of the two metrics loss & RTT, loss is more critical since a loss of a

packet will typically cause timeouts that can last for several seconds. Fig. 2

shows the loss measured from ESnet sites (ANL, BNL, DoE, ESnet NOC, FNAL, LANL,

& SLAC) to various regions of the world.

Figure 2: Monthly packet loss as a function of time, seen
from ESnet sites to various regions of the world. The numbers in
parentheses indicate the number of monitor site / remote site pairs
contributing to the medians. The orange dots show 50%
improvement/year.

The following observations can be made:

- The improvement in losses is about 10-50% per year.

- The best networks are achieving better than 0.1% packet loss for most of their

sites seen from ESnet.

- Russia, S.E. Europe and China are several years behind Europe and

Anglo America.

Another way of looking at the losses is to see how many hosts fall in the

various loss quality categories defined above as a function of time. An example

of such a plot is seen in Fig 3.

Figure 3: Number of hosts measured from SLAC for each
quality category from Jan 2000 through December 2003.

It can be seen that recently most sites fall in the good quality category.
Also the fraction of sites with good quality has increased from about 50% to about
60% in the 2.5 years displayed. The plot also shows the total number of hosts monitored
from SLAC at various times. Towards the end of 2001 the number started dropping
as sites blocked pings due to security concerns. The rate of blocking is such
that out of 214 hosts that were pingable in July 2003, 33 (~15%) were no longer pingable in December 2003.

The increase towards the end of 2002 and early 2003 was due to help from the
Abdus Salam International Centre for Theoretical Physics (ICTP). The ICTP held a Round Table meeting on Developing
Country Access to On-Line Scientific Publishing: Sustainable Alternatives
[ictp] in Trieste in November 2002 that included a Proposal
for Real time monitoring in Africa [africa-rtmon]. Following the meeting a
formal declaration was made on Recommendations
of the Round Table held in Trieste to help bridge the digital divide
[icfa-rec]. The PingER project is collaborating with the ICTP to develop a
monitoring project aimed at better understanding and quantifying the Digital
Divide. On December 4th the ICTP electronic Journal Distribution Service (eJDS)
sent an email entitled Internet
Monitoring of Universities and Research Centers in Developing Countries
[ejds-email] to their collaborators informing them of the launch of the
monitoring project and requesting participation. By January 14th 2003, with the
help of ICTP, we added about 23 hosts in about 17 countries including:
Bangladesh, Brazil, China, Colombia, Ghana, Guatemala, India (Hyderabad and Kerala), Indonesia, Iran, Jordan, Korea, Mexico, Moldova, Nigeria, Pakistan,
Slovakia and Ukraine.

The increase towards the end of 2003 was spurred by preparations for the
second Open Round Table on
Developing Countries Access to Scientific Knowledge: Quantifying the Digital
Divide, held 23-24 November in Trieste, Italy, and for the WSIS conference and
associated activities in Geneva in December 2003.

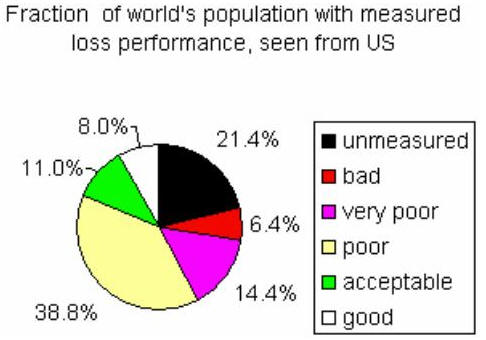

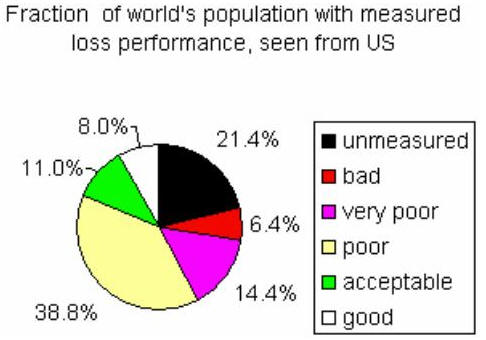

Fig. 4 shows the fractions of the world's connected population by loss quality.
We group countries by the loss quality seen from the US, sum the connected
populations of the countries in each loss quality group
(we obtained the population/country figures from "How many Online" [nua]),
and divide each sum by the total world's connected population.

Figure 4: Fraction of the world's connected population in
countries with measured loss performance in 2001 and December 2003

It can be seen that in 2001, <20% of the population lived in countries

with good to acceptable packet loss. By December 2003 this had risen to 75%. The

coverage of PingER has also increased from about 70 countries at the start of

2003 to over 100 in January 2004. This in turn reduced the fraction of the

connected population for which PingER has no measurements.

RTTs

Unfortunately there are limits to the minimum RTT due to the speed of light
in fibers or electricity in copper. Typically today, the minimum RTTs for
terrestrial circuits are about 2 * distance / (0.6 * (0.6 * c)), where
c is the velocity of light, the factor of 2 accounts for the round-trip,
0.6 * c is roughly the speed of light in
fiber and the extra 0.6 allows roughly for the delays in network equipment such
as routers. For geostationary satellite links the minima are between 500 and
600ms. For U.S. cross country links (e.g. from SLAC to BNL) the typical minimum RTT
(i.e. a packet sees no queuing delays) is about 70 msec.
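The rule of thumb above for the minimum terrestrial RTT can be evaluated directly. The sketch below is illustrative: the function name `min_rtt_ms` is made up for the example, and the 4000 km path length is an assumed figure for a U.S. cross country route, not a measured SLAC to BNL distance.

```python
# Speed of light in vacuum, in km/s.
C_KM_PER_S = 299_792.458


def min_rtt_ms(distance_km, fiber_factor=0.6, equipment_factor=0.6):
    """Estimate the minimum RTT as 2 * distance / (0.6 * (0.6 * c)).

    fiber_factor scales c to the speed of light in fiber, and
    equipment_factor roughly allows for delays in routers and other
    network equipment, following the factors given in the text.
    """
    effective_speed = equipment_factor * fiber_factor * C_KM_PER_S
    # Factor of 2 for the round trip; convert seconds to milliseconds.
    return 2.0 * distance_km / effective_speed * 1000.0


if __name__ == "__main__":
    # Assumed 4000 km path; gives roughly 74 ms, in line with the
    # "about 70 msec" quoted for U.S. cross country links.
    print(round(min_rtt_ms(4000.0), 1))
```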

The RTTs seen from ESnet monitoring sites (in U.S.) to hosts in various regions

are seen in Fig. 6.

Figure 6: RTTs seen from ESnet sites to various regions of
the world. Edu indicates hosts in the .edu Top Level Domain (TLD), i.e.
mainly U.S. universities. The straight lines are fits to exponential
functions to help guide the eye. The orange lines are for 10 & 20%
improvements (reductions) in RTT/year.

It is seen that Anglo American and European sites have been improving by

between 10 & 20% per year. Japan and Russia are improving at a slower rate and

S. E. Europe & mainland China at a faster rate. The improvements are due to

faster links (less time clocking the bits in and out of the network equipment),

faster routers and improved routes.

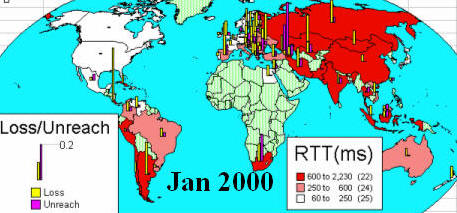

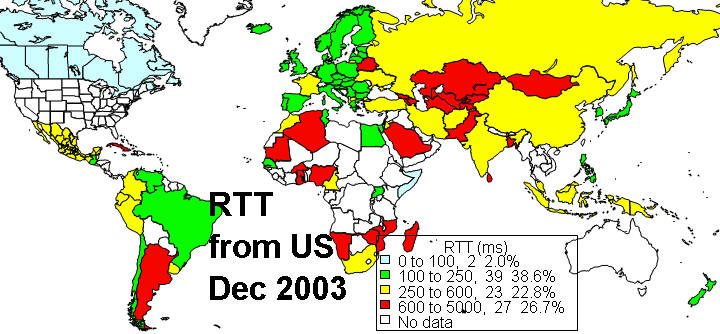

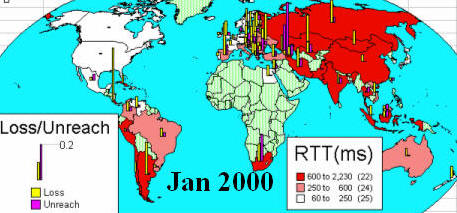

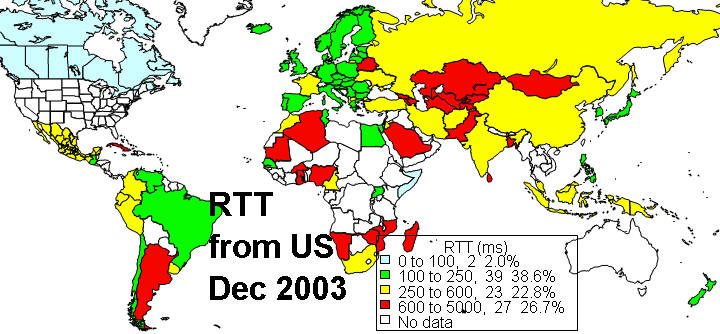

Fig. 7 shows the RTT from the U.S. to the world in January 2000 and December
2003.
It also indicates which countries of the world contain sites that are monitored
(in the Jan 2000 map countries in green are not monitored; in the Dec 2003 map, apart
from the US, unmonitored countries are left white).

Figure 7: Average monthly RTT measured from the U.S. to various
countries of the world for January 2000 and August 2002. Countries shaded
green are not measured.

It is seen that the number of countries with satellite links (> 600ms RTT

or dark red) has decreased markedly in the 3 years shown. Today satellite

links are used in places where it is hard or unprofitable to install

terrestrial lines (typically fibers).

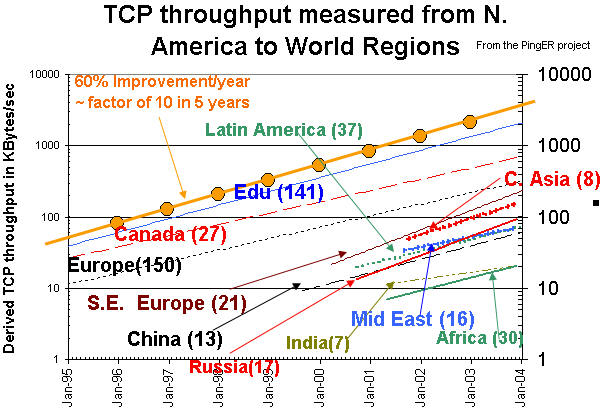

Throughput

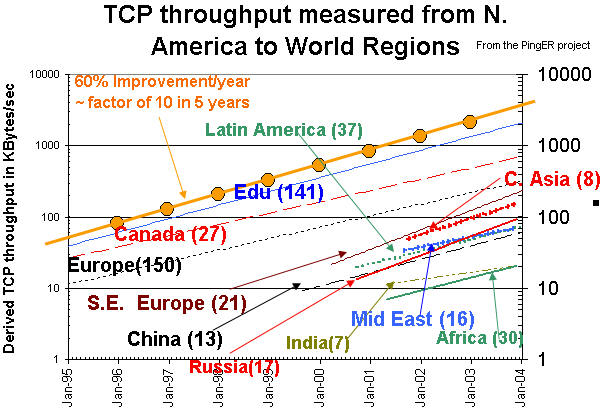

We also combine the loss and RTT measurements using throughput =
1460 Bytes [Maximum Segment Size] / (RTT * sqrt(loss)) from [mathis]. The results are shown in Fig. 8.
The orange line shows a ~60% improvement/year or about a factor of 10 in 5 years.
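The derived throughput formula above is simple to compute from a measured RTT and loss. The following is a minimal sketch under the assumptions stated in the text (1460-byte packets, loss expressed as a fraction); the function name `derived_throughput_kbps` is chosen for the example and the formula is undefined for zero loss, so the sketch rejects that input.

```python
from math import sqrt


def derived_throughput_kbps(rtt_ms, loss_fraction, packet_bytes=1460):
    """Derived TCP throughput in kbits/s from RTT and packet loss.

    Implements throughput = packet size / (RTT * sqrt(loss)), with the
    packet size in bits, RTT in seconds and loss as a fraction (0.01
    means 1% loss).
    """
    if rtt_ms <= 0 or loss_fraction <= 0:
        raise ValueError("RTT and loss must both be positive")
    bits = packet_bytes * 8
    bits_per_second = bits / ((rtt_ms / 1000.0) * sqrt(loss_fraction))
    return bits_per_second / 1000.0


if __name__ == "__main__":
    # 200 ms RTT with 1% loss gives about 584 kbits/s.
    print(round(derived_throughput_kbps(200.0, 0.01)))
    # Halving the RTT doubles the derived throughput.
    print(round(derived_throughput_kbps(100.0, 0.01)))
```

Because throughput scales as 1/sqrt(loss), reducing loss by a factor of 4 only doubles the derived throughput, whereas halving the RTT doubles it directly.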

Figure 8: Derived throughput as a function
of time seen from ESnet sites to various regions of the
world. The numbers in parentheses are the number of monitoring/remote host
pairs contributing to the data. The lines are exponential fits to the data.
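An exponential fit of the kind mentioned in the caption can be obtained with an ordinary least-squares line through the logarithm of the data. The sketch below is one common way to do this and is not necessarily the procedure used for the figures; the function name `fit_exponential` and the synthetic data are assumptions for illustration only.

```python
from math import exp, log


def fit_exponential(years, values):
    """Fit values ~ a * exp(b * years) by linear regression on log(values).

    Returns (a, b, annual_change), where annual_change is the fractional
    change per year, e.g. 0.6 for a 60% improvement/year.
    """
    n = len(years)
    logs = [log(v) for v in values]
    mean_x = sum(years) / n
    mean_y = sum(logs) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, logs))
    b = sxy / sxx                   # slope of log(value) versus year
    a = exp(mean_y - b * mean_x)    # value at year 0
    return a, b, exp(b) - 1.0


if __name__ == "__main__":
    # Synthetic series growing by exactly 60% per year (not measured data).
    years = [0, 1, 2, 3, 4, 5]
    values = [100.0 * 1.6 ** t for t in years]
    a, b, annual = fit_exponential(years, values)
    print(round(annual, 3))
    # A 60%/year improvement compounds to about a factor of 10 in 5 years.
    print(round((1.0 + annual) ** 5, 2))
```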

The data for several of the developing countries only extends back a few months
and so some care must be taken in interpreting the long term trends. With this
caveat, it can be seen that the
performance to regions such as S.E. Europe, Russia, and Central Asia is
catching up with the more developed countries; the Middle East, Africa, Latin
America and China only appear to be keeping up; while India appears to be
falling further behind. At the same time it is seen that the Middle East, Latin
America and China are about 6 years behind Europe with throughputs five or more
times less, and India and Africa are 8 years behind with throughputs 15 times
lower than those for Europe. In fact sites in Africa and India appear to
have throughputs less than that of a well connected (cable, DSL or ISDN) home in
Europe or Anglo America. For more on Africa see Connectivity
Mapping in Africa [ictp-jensen], African Internet
Connectivity [africa] and
Internet Performance to Africa [ejds-africa]. We are measuring mainly to
academic and research sites and not to many commercial sites. This may account
for the poor performance observed for India where there are many companies (such
as Oracle, Microsoft, Cisco) with probably excellent connectivity to which we
are not making measurements.

View from Europe

To assist in developing a less N. American view of the Digital Divide, we

started adding many more hosts in developing countries to the list of hosts

monitored from CERN. We now have data going back for almost two and a half

years that enables us to make some statements about performance as seen from

Europe.

Figure 9: Derived throughputs to various regions as seen from CERN.

The sudden drop in throughput for the Middle East is an artifact caused by

initially only monitoring hosts in 2 Middle East countries (Israel and Egypt)

with one (Israel) having markedly better performance (factor of 20) than

anywhere else in the Middle East. As we added hosts in more Middle East

Countries (starting in July 2003), the median dropped dramatically as Israel had

less effect. A similar effect can be seen for S.E. Europe where the dominating

countries were initially Croatia and Slovenia, that in July 2003 were joined by

Moldova, Macedonia and Romania. We expect to add several hosts to both regions

based on hosts being successfully monitored from SLAC. The orange lines indicate

improvements of 50% and 100% per year to help guide the eye. The other lines are

exponential fits to the data. It can be seen that there is a large spread in the

data points from month to month. Apart from the special cases of the Middle East

and S.E. Europe mentioned above, the trends are similar to those seen from

ESnet: the improvements are between 50% and 100% per year; Russia and S.E. Europe

are catching up with N. America; Latin America and China are keeping up; Africa

and India are falling behind. There is insufficient data at the moment to

indicate how far the various regions are behind N. America or how long it will

take to catch up.

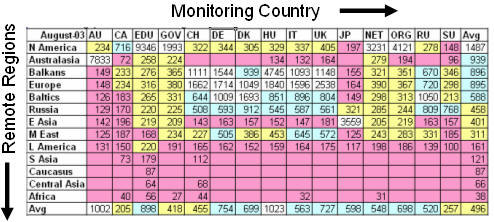

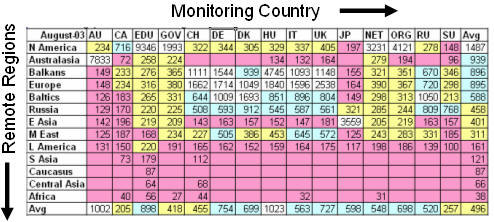

Variability of performance between and within regions

The throughput results, so far presented in this report, have been measured from

Anglo America or to a lesser extent from Europe. This is partially since there

is more data for a longer period available for the Anglo America monitoring

hosts. Table 3 shows the throughputs seen between monitoring and monitored hosts

in the major regions of the world. Each column is for monitoring hosts in a

given region, each row is for monitored hosts in a given region. The cells are

colored according to the median quality for the monitoring region/monitored

region pair. White is for derived throughputs > 1000 Kbits/s (good), blue for

<= 1000 Kbits/s & >500Kbits/s (acceptable), yellow for <= 500Kbits/s and > 200

Kbits/s, and magenta for <= 200Kbits/s (very poor to bad). The table is

ordered by decreasing average performance. The Monitoring countries are identified by the 2 character TLD. The .ORG site is JLab. The .NET sites are APAN in Japan

and the ESnet NOC at LBNL. The .GOV sites are ESnet sites (ANL, BNL, DOE-HQ,

FNAL, and LANL).

S. Asia is the Indian sub-continent; S.E. Asia is composed of measurements to

Indonesia, Malaysia, Singapore, Thailand and Vietnam.

Table 3: Derived throughputs in kbits/s from monitoring hosts to
monitored hosts by region of the world for August 2003.

There are a couple of anomalies: the Mid East measurements are almost

entirely composed of measurements to Israel; the Caucasus measurements are to 2

countries with very different performance: Azerbaijan (50% loss) and Georgia (3%

loss). It can be seen that in general performance is good to acceptable. Regions

with very poor to bad performance include Africa, S. Asia (India), the Caucasus region

and S. America. Though not broken out here, performance to S.E. Asia

is generally poor.

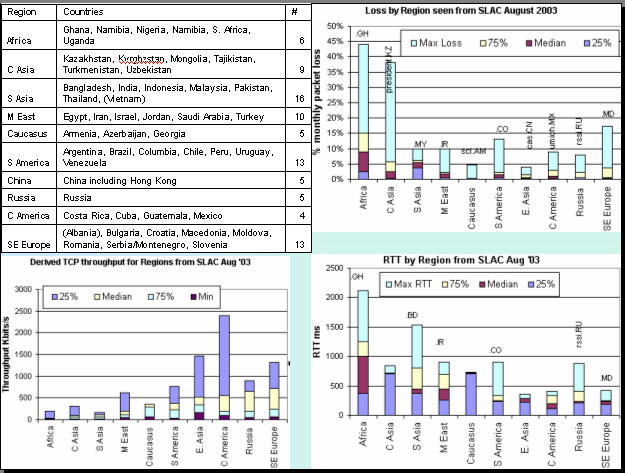

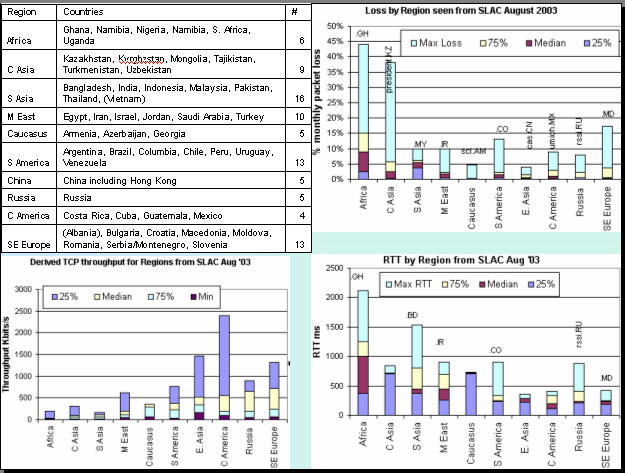

To provide further insight into the variability in
performance for various regions of the world seen from SLAC, Figure 10 shows
various statistical measures of the RTTs, losses and derived throughputs (the
definitions of the countries comprising each region are also shown).

Figure 10: Maximum, 75 percentile, median and 25 percentile RTTs,
losses and derived throughputs for various regions
measured from SLAC for August 2003.

When there are large outliers, the sites/countries with the maxima are
indicated. There are quite a lot of regions with outliers. Ghana (.GH) is
particularly poor for Africa. The Caucasus & C. Asia are much more uniform now that
the virtual Silk Road project is in place; however, the virtual Silk Road does
not serve president.KZ so Kazakhstan (.KZ) stands out. For S. America,
performance is improving since the AMPATH project started providing services.
However, AMPATH does not reach Colombia (.CO) at the moment. Other countries
that stand out as being particularly poor for their regions are Iran (.IR) and
Moldova (.MD), and within Russia (.RU) the RSSI has particularly poor
performance.

The PingER method of measuring throughput breaks down for high

speed networks due to the different nature of packet loss for ping compared to

TCP, and also since PingER only measures about 14,400 pings of a given

size/month between a given monitoring host/monitored host pair. Thus if the link

has a loss rate of better than 1/14400 the loss measurements will be inaccurate.

For a 100Byte packet, this is equivalent to a Bit Error Rate (BER) of 1 in 10^8,

and leading networks are typically better than this today (Jan 2004). This is partially the reason the ESnet and Abilene losses appear to have

leveled out since 2000 in Fig. 2. For example if the loss probability is <

1/14400 then we take the loss as being 0.5 packet to avoid a division by zero,

so if the average RTT for ESnet is 50msec then the maximum throughput we can use

PingER data to predict is ~ 1460Bytes*8bits/(0.050sec*sqrt(0.5/14400)) or ~

40Mbits/s and for an RTT of 200ms this reduces to 10Mbits/s.

To address this challenge and to understand and provide monitoring of high
performance throughput between major sites of interest to HEP and the Grid, we
developed the IEPM-BW monitoring infrastructure and toolkit. There are about 10 monitoring hosts and about 50 monitored hosts in 9
countries (CA, CH, CZ, FR, IT, JP, NL, UK, US). Both application (file copy and
file transfer) and TCP throughputs are measured.
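The upper limit on the throughput that PingER data can predict, discussed earlier in this section, follows from the smallest loss the method can resolve. The sketch below reproduces that arithmetic using the figures quoted in the text (about 14,400 pings per month, a floor of 0.5 lost packets, 1460-byte packets); the function name `max_predictable_throughput_mbps` is an assumption for the example.

```python
from math import sqrt

# Approximate number of pings of a given size per month per host pair.
PINGS_PER_MONTH = 14_400


def max_predictable_throughput_mbps(rtt_ms, pings=PINGS_PER_MONTH,
                                    floor_lost_packets=0.5,
                                    packet_bytes=1460):
    """Largest throughput (Mbits/s) PingER loss data can predict.

    When no loss is observed, the loss is taken as floor_lost_packets
    out of the pings sent, which avoids a division by zero and caps the
    derived throughput for a given RTT.
    """
    loss_floor = floor_lost_packets / pings
    bits = packet_bytes * 8
    return bits / ((rtt_ms / 1000.0) * sqrt(loss_floor)) / 1e6


if __name__ == "__main__":
    # About 40 Mbits/s at 50 ms and about 10 Mbits/s at 200 ms.
    print(round(max_predictable_throughput_mbps(50.0), 1))
    print(round(max_predictable_throughput_mbps(200.0), 1))
```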

These measurements indicate that throughputs of several hundreds of Mbits/s

are achievable on today's production academic and research networks, using

common off the shelf hardware, standard network drivers, TCP stacks etc.,

standard packet sizes etc. Achieving these levels of throughput requires care in

choosing the right configuration parameters. These include large TCP buffers and

windows, multiple parallel streams, sufficiently powerful cpus (typically better

than 1 MHz/Mbit/s), fast enough interfaces and busses, and a fast enough link

(> 100Mbits/s) to the Internet. In addition for file operations one needs

well designed/configured disk and file sub-systems.
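To give a feel for what "large TCP buffers and windows" means in practice, the sketch below uses the standard bandwidth-delay product rule of thumb (window = bandwidth * RTT). That rule is not stated in this report and is brought in here as an assumption, as is the simplification that parallel streams share the required window equally. The CPU estimate uses the 1 MHz per Mbit/s figure from the text. All function names are made up for the example.

```python
def tcp_window_bytes(bandwidth_mbps, rtt_ms):
    """Bandwidth-delay product in bytes for a target rate and RTT."""
    bytes_per_second = bandwidth_mbps * 1e6 / 8
    return bytes_per_second * rtt_ms / 1000.0


def per_stream_window_bytes(bandwidth_mbps, rtt_ms, streams):
    """Window per stream, assuming equal sharing across parallel streams."""
    if streams < 1:
        raise ValueError("streams must be at least 1")
    return tcp_window_bytes(bandwidth_mbps, rtt_ms) / streams


def min_cpu_mhz(bandwidth_mbps, mhz_per_mbps=1.0):
    """CPU clock suggested by the 1 MHz/Mbit/s rule of thumb in the text."""
    return bandwidth_mbps * mhz_per_mbps


if __name__ == "__main__":
    # Hypothetical target: 500 Mbits/s over a 150 ms trans-oceanic path.
    print(tcp_window_bytes(500.0, 150.0))            # total window in bytes
    print(per_stream_window_bytes(500.0, 150.0, 4))  # with 4 parallel streams
    print(min_cpu_mhz(500.0))
```

The example shows why default window sizes of tens of kilobytes are far too small on long paths: the hypothetical case above needs a window of several megabytes in total.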

Though not strictly monitoring, there is currently much activity in

understanding and improving the TCP stacks (e.g. [floyd], [low], [ravot]). In

particular with high speed links (> 500Mbits/s) and long RTTs (e.g.

trans-continental or trans-oceanic) today's standard TCP stacks respond poorly

to congestion (back off too quickly and recover too slowly). To partially

overcome this one can use multiple streams or in a few special cases large

(>> 1500Bytes) packets. In addition new applications ([bbcp], [bbftp],

[gridftp]) are being developed to allow use of larger windows and multiple

streams as well as non TCP strategies ([tsnami]). Also there is work to

understand how to improve the operating system configurations

[os] to improve the throughput performance. As it becomes increasingly possible

to utilize more of the available bandwidth, more attention will need to be paid

to fairness and the impact on other users (see for example [coccetti] and [bullot]). Besides ensuring the fairness of TCP

itself, we may need to deploy and use quality of service techniques such as QBSS

[qbss] or TCP stacks that back-off prematurely hence enabling others to utilize

the available bandwidth better [kuzmanovic]. These subjects will be covered in more detail in the companion ICFA-SCIC

Advanced Technologies Report. We note here that monitoring infrastructures such

as IEPM-BW can be effectively used to measure and compare the performance of TCP

stacks, measurement tools, applications and sub-components such as disk and file

systems and operating systems in a real world environment.

PingER and IEPM-BW are excellent systems for monitoring the general health

and capability of the existing networks used worldwide in HEP. However, we need

additional end-to-end tools to provide individuals with the capability to

quantify their network connectivity along specific paths in the network and also

easier to use top level navigation/drill-down tools. The former are

needed to both ascertain the user's current network capability as well as to

identify limitations which may be impeding the user's ultimate (expected) network

performance. The latter are needed to simplify finding the relevant data.

Most HEP users are not "network wizards" and don't wish to become one.

Because of HEP's critical dependence upon networks to enable their global

collaborations and grid computing environments, it is extremely important that

more user specific tools be developed to support these physicists.

Efforts are underway in the HENP community, in conjunction with the

Internet2 End-to-End (E2E) Performance

Initiative [E2Epi], to develop and deploy a network measurement and diagnostic

infrastructure which includes end hosts as test points along end-to-end paths in

the network. The E2E

piPEs project [PiPES], the NLANR/DAST

Advisor project [Advisor] and the EMA

(End-host Monitoring Agent) [EMA] are all working together to help develop an

infrastructure capable of making on demand or scheduled measurements along

specific network paths and storing test results and host details for future

reference in a common data architecture. The information format will utilize the

GGF NMWG [NMWG] schema to provide portability for the results. This information could

be immediately used to identify common problems and provide solutions as well as

to acquire a body of results useful for baselining various combinations of

hardware, firmware and software to define expectations for end users.

A primary goal is to provide as "lightweight" a client component as possible

to enable widespread deployment of such a system. The EMA Java Web Start client

is one example of such a client, and another is the

Network Diagnostic Tester (NDT)

tool [NDT]. By using Java and Java Web Start, the most

current testing client can be provided to end users as easily as opening a web

page. The current version supports both Linux and Windows clients.

Details of how the data is collected, stored, discovered and queried are

being worked out. A demonstration of a preliminary system is being shown at the

Internet2 Joint-techs meeting in

Hawaii on January 25th, 2004.

The goal of easier to use top level drill down navigation to the measurement

data is being tackled by MonALISA

[MonALISA] in collaboration with the IEPM project.

A long term goal is to merge PingER and IEPM-BW results into a larger

distributed database architecture for use by grid scheduling and network

diagnostic systems. By combining general network health and performance

measurement with specific end-to-end path measurements we can enable a much more

robust, performant infrastructure to support HEP worldwide and help bridge the

Digital Divide.

Recent studies of HEP needs, for example the TAN Report (http://gate.hep.anl.gov/lprice/TAN/Report/TAN-report-final.doc)

have focused on communications between developed regions such as Europe and

Anglo America. In such reports packet loss less than 1%, vital for

unimpeded interactive log-in, is assumed and attention is focused on bandwidth

needs and the impact of low, but non-zero, packet loss on the ability to exploit

high-bandwidth links. The PingER results show clearly that much of the

world suffers packet loss impeding even very basic participation in HEP

experiments and points to the need for urgent action.

The PingER throughput predictions based on the Mathis

formula assume that throughput is mainly limited by packet

loss. The 60% per year growth curve in figure 8 is somewhat lower than the 79% per year growth in future needs

that can be inferred from the tables in the TAN Report. True throughput

measurements have not been in place for long enough to measure a growth

trend. Nevertheless, the throughput measurements, and the trends in

predicted throughput, indicate that current attention to HEP needs between

developed regions could result in needs being met. In contrast, the

measurements indicate that the throughput to less developed regions is likely to

continue to be well below that needed for full participation in future

experiments.

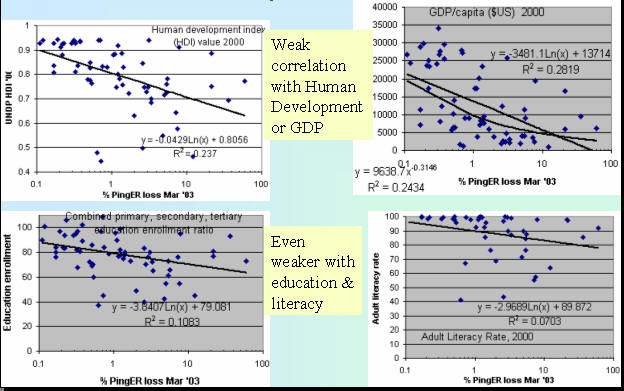

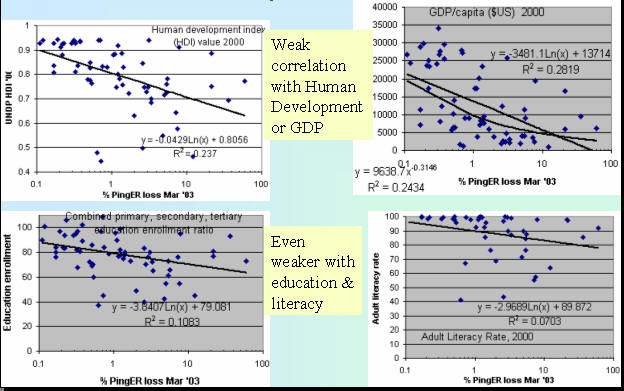

Various economic indicators have been developed by the U.N. and the

International Telecommunications Union (ITU). It is interesting to see how well

the PingER performance indicators correlate with the economic indicators. The

comparisons are particularly interesting in cases where the agreement is poor,

and may point to some interesting anomalies or suspect data.

The Human Development Index (HDI) is a summary measure of human development

(see

http://hdr.undp.org/reports/global/2002/en/ ). It measures the average

achievements in a country in three basic dimensions of human development:

- A long and healthy life, as measured by life expectancy at birth

- Knowledge, as measured by the adult literacy rate (with two-thirds weight)

and the combined primary, secondary and tertiary gross enrolment ratio (with

one-third weight)

- A decent standard of living, as measured by GDP per capita (PPP US$).
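The weighting described in the list above can be sketched in a few lines. The two-thirds/one-third split for the knowledge dimension comes from the text; the equal weighting of the three dimensions, the function names and the sample index values are assumptions for illustration only and are not taken from any UNDP table.

```python
def education_index(adult_literacy_index, gross_enrolment_index):
    """Knowledge dimension: two-thirds literacy, one-third enrolment."""
    return (2.0 * adult_literacy_index + gross_enrolment_index) / 3.0


def human_development_index(life_index, education_idx, gdp_index):
    """Combine the three dimension indices into one summary value.

    Assumption: the three dimensions are weighted equally; the text
    above does not state how they are combined.
    """
    for value in (life_index, education_idx, gdp_index):
        if not 0.0 <= value <= 1.0:
            raise ValueError("dimension indices are expected in [0, 1]")
    return (life_index + education_idx + gdp_index) / 3.0


# Hypothetical dimension indices, each already scaled to the range 0..1.
edu = education_index(adult_literacy_index=0.9, gross_enrolment_index=0.6)
hdi = human_development_index(life_index=0.7, education_idx=edu, gdp_index=0.6)
print(f"education index {edu:.2f}, HDI {hdi:.2f}")
```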

Figure 11: Comparisons of PingER losses seen from N. America to various countries versus various U.N. Development Programme (UNDP) indicators.

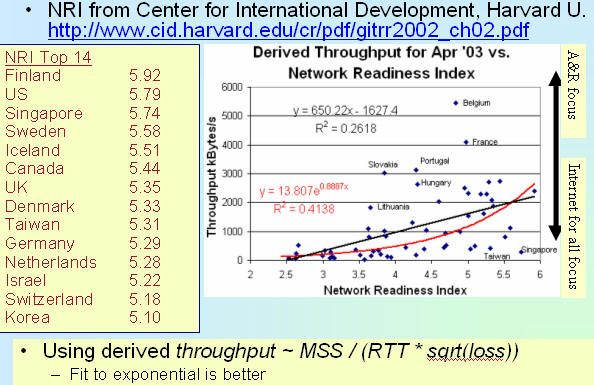

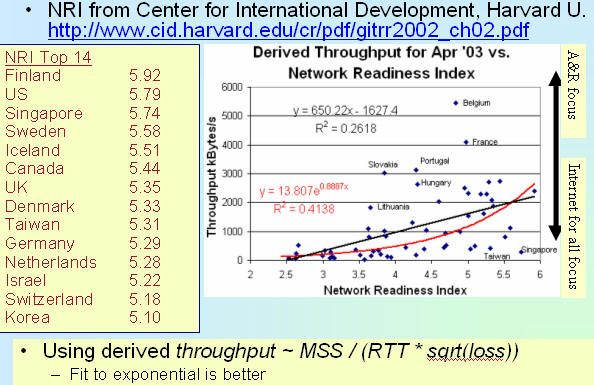

The Network Readiness Index (NRI) from the Center for International

Development, Harvard University (see

http://www.cid.harvard.edu/cr/pdf/gitrr2002_ch02.pdf ) is a major

international assessment of countries’ capacity to exploit the opportunities

offered by Information and Communications Technologies (ICTs), i.e. a

community’s potential to participate in the Networked World of the future. The

goal is to construct a network use component that measures the extent of current

network connectivity, and an enabling factors component that measures a

country’s capacity to exploit existing networks and create new ones. Network use

is defined by 5 variables related to the quantity and quality of ICT use.

Enabling factors are based on network access, network policy, networked society

and the networked economy.

Figure 12: PingER throughputs measured from N. America vs. the Network Readiness Index.

Some of the outlying countries are identified by name. Countries at the

bottom right of the right hand graph may be concentrating on Internet access for

all, while countries in the upper right may be focusing on excellent academic &

research networks.

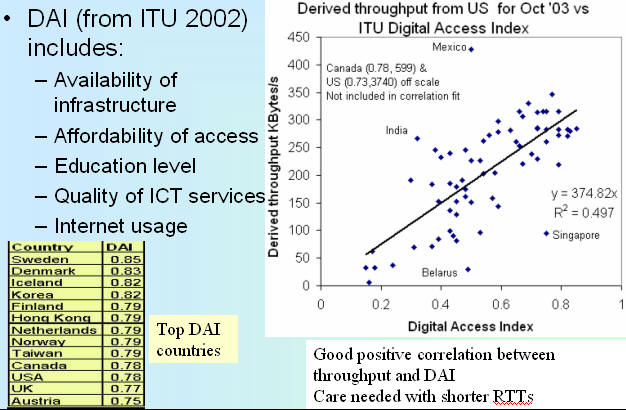

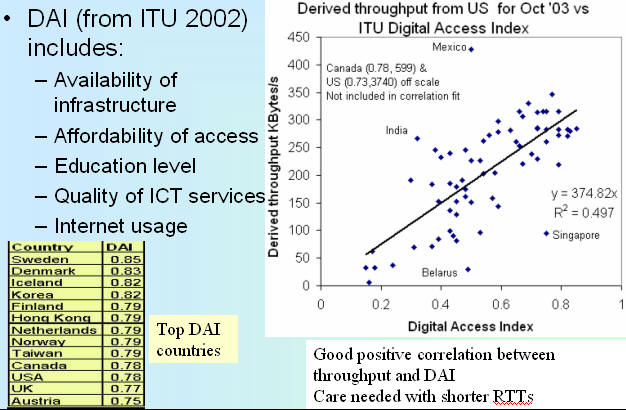

The Digital Access Index (DAI) created by the ITU combines eight variables,

covering five areas, to provide an overall country score. The areas are

availability of infrastructure, affordability of access, educational level,

quality of ICT services, and Internet usage. The results of the Index point to

potential stumbling blocks in ICT adoption and can help countries identify their

relative strengths and weaknesses.

Figure 13: PingER derived throughput vs. the ITU Digital Access Index for PingER countries monitored from the U.S.

Since the PingER Derived Throughput is inversely proportional to RTT, countries close to the U.S. (i.e. the U.S., Canada and Mexico) may be expected to have elevated Derived Throughputs compared to their DAI. We thus do not use the U.S. and Canada in the correlation fit, and they are also off-scale in Figure 13. Mexico is included in the fit; however, it is also seen to have an elevated Derived Throughput. Less easy to explain is India's elevated Derived Throughput. This may be due to the fact that we monitor university and research sites, which may have much better connectivity than India in general. Belarus, on the other hand, apparently has poorer Derived Throughput than would be expected from its DAI. This could be an anomaly for the one host currently monitored in Belarus, and thus illustrates the need to monitor multiple sites in a developing country.
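The effect of leaving nearby countries out of the correlation fit can be illustrated with a short sketch. The helper names, the country labels and every number below are placeholders invented for illustration; they are not PingER or ITU data, and the report does not state which fitting procedure was used, so a plain Pearson correlation is assumed here.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


def correlation_excluding(points, excluded):
    """Correlate index score vs. throughput, skipping the named countries."""
    kept = [pair for name, pair in points.items() if name not in excluded]
    xs, ys = zip(*kept)
    return pearson_r(xs, ys)


# Placeholder data: name -> (index score, derived throughput in kbit/s).
points = {
    "near_1": (0.80, 9000.0),  # short RTT inflates the derived throughput
    "near_2": (0.78, 8000.0),
    "far_a": (0.30, 100.0),
    "far_b": (0.50, 200.0),
    "far_c": (0.70, 300.0),
}
r_all = correlation_excluding(points, excluded=set())
r_fit = correlation_excluding(points, excluded={"near_1", "near_2"})
print(f"all points: r = {r_all:.2f}; nearby countries excluded: r = {r_fit:.2f}")
```

In this invented data set the two short-RTT points sit far above the trend of the others, so the correlation is noticeably weaker when they are kept in.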

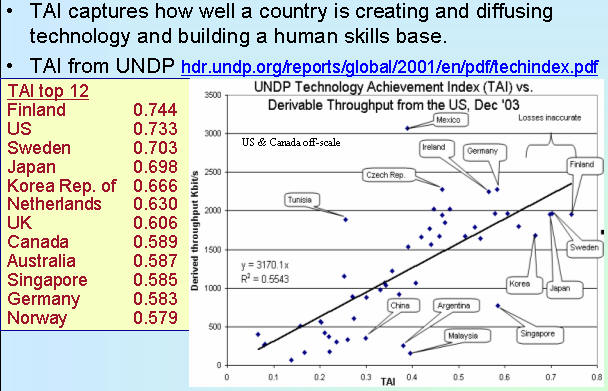

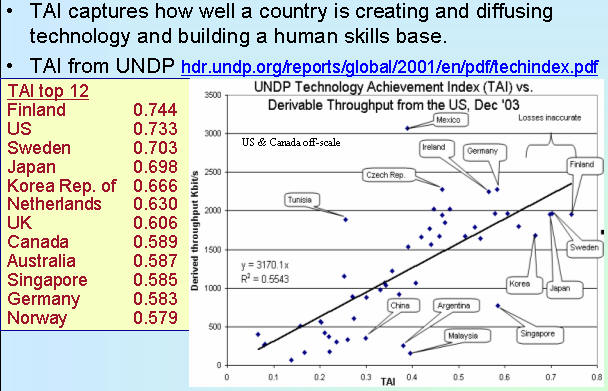

The United Nations Development Programme (UNDP) introduced the Technology Achievement Index (TAI) to reflect a country's capacity to participate in the technological innovations of the network age. The TAI aims to capture how well a country is creating and diffusing technology and building a human skill base. It includes the following dimensions: creation of technology (e.g. patents, royalty receipts); diffusion of recent innovations (Internet hosts/capita, high & medium tech exports as share of all exports); diffusion of old innovations (log phones/capita, log of electric consumption/capita); human skills (mean years of schooling, gross enrollment in tertiary level in science, math & engineering). Figure 14 shows December 2003's derived throughput measured from the U.S. vs. the TAI. The correlation is seen to be positive and medium to good. The U.S. and Canada are excluded since the losses are not accurately measurable by PingER and the RTT is small. Hosts in well connected countries such as Finland, Sweden and Japan also have their losses poorly measured by PingER. Since they have long RTTs, their derived throughput is likely to be low when using the Mathis formula, where, if no packets are lost, we assume a loss of 0.5 packets in the 14,400 sent to a host in a month.
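The zero-loss convention mentioned above can be written down directly. The figures of 0.5 packets and 14,400 packets per month come from the text; the function and constant names are hypothetical. A non-zero floor is needed because the Mathis formula divides by the square root of the loss.

```python
PINGS_PER_MONTH = 14_400    # packets sent to a host in a month (from the text)
ZERO_LOSS_EQUIVALENT = 0.5  # packets assumed lost when none are observed (from the text)


def effective_loss_fraction(packets_lost, packets_sent=PINGS_PER_MONTH):
    """Return the loss fraction, substituting 0.5 lost packets for zero loss."""
    if packets_sent <= 0:
        raise ValueError("packets_sent must be positive")
    if not 0 <= packets_lost <= packets_sent:
        raise ValueError("packets_lost must be between 0 and packets_sent")
    lost = packets_lost if packets_lost > 0 else ZERO_LOSS_EQUIVALENT
    return lost / packets_sent


floor = effective_loss_fraction(0)
print(f"loss floor: {floor:.2e}")
```

With this convention the smallest loss that can enter the formula is about 0.0035%, which caps the derived throughput of a loss-free host at a value set by its RTT alone.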

Figure 14: PingER derived throughputs vs. the UNDP Technology Achievement Index (TAI).

We have extended the measurements to cover more developing countries and to

increase the number of hosts monitored in each developing country. As a result

the number of countries monitored has increased by about 24% to over 100.

We have also spent much time working with contacts to unblock pings to their

sites (as mentioned above ~15% of hosts pingable in July 2003 were no longer pingable in December 2003). It is unclear how cost-effective this activity is. It can take many

emails to explain the situation, sometimes requiring restarting when the problem

is passed to a more technically knowledgeable person. Even then there are

several unsuccessful cases where even after many months of emails and the best

of intentions the pings remain blocked. Two specific cases are for all

university sites in Vietnam and Australia.

Even finding knowledgeable contacts, explaining what is needed and following

up to see if the recommended hosts are pingable, is quite labor intensive. More

recently we have had more success by using Google to search for university web

sites in specific TLDs. The downside is that this way we do not have any

contacts with specific people with whom we can deal in case of problems.

We worked with the eJDS/ICTP to produce a web site (http://www.ejds.org/) dedicated to PingER and the Digital Divide. The attention of HEP to the Digital Divide was encouraged by the presentation of a paper at CHEP03 in San Diego. We worked with the ICTP to organize the very successful second Open Round Table on Developing Countries Access to Scientific Knowledge: Quantifying the Digital Divide, held 23-24 October 2003 in Trieste, Italy, which we attended and at which we presented 3 talks. As part of this a poster was created and the proceedings were distributed. Wider distribution of the results was made by including slides in presentations at the RSIS by Harvey Newman, Hans Hoffman and the director of ICTP. With eJDS we produced an A3 poster for the WSIS/RSIS in Geneva. We also attended the SIS at Palexpo, Geneva in December 2003 and gave multiple demonstrations of "PingER: Measuring the Digital Divide" at various venues including CHEP03, RIPE, ICTP, SLAC, BaBar, SC2003 and SIS.

We made contact with international organizations including the UN, UNESCO and the Soros Foundation and made them aware of the eJDS/PingER monitoring project. Hilda Cerdeira of ICTP is discussing possible follow-up and collaboration with UNESCO, and we are discussing with IDRC (see http://www.idrc.ca/) a proposal to extend the monitoring of Africa.

Publications:

- Developing countries and the global science web, Hilda Cerdeira,

Enrique Canessa, Carlo Fonda & R. Les Cottrell, CERN Courier Vol 43, Number

10, December 2003. Available at

http://www.cerncourier.com/main/article/43/10/18

- Measuring the Digital Divide with PingER, R. Les Cottrell and

Warren Matthews, Developing Countries Access to Scientific Knowledge:

Quantifying the Digital Divide, ICTP Trieste, October 2003; also

SLAC-PUB-10186.

- PingER History and Methodology R. Les Cottrell and Connie Logg,

Developing Countries Access to Scientific Knowledge: Quantifying the Digital

Divide, ICTP Trieste, October 2003; also SLAC-PUB-10187.

- Internet Performance to Africa R. Les Cottrell and Enrique Canessa,

Developing Countries Access to Scientific Knowledge: Quantifying the Digital

Divide, ICTP Trieste, October 2003; also SLAC-PUB-10188

- Monitoring the Digital Divide, E. Canessa, H. A. Cerdeira, W.

Matthews, R. L. Cottrell, SLAC-PUB-9730, CHEP 2003, San Diego, March 2003.

Presentations:

- PingER: Measuring the Digital Divide, demonstration presented by Les Cottrell at the SIS Exposition, Palexpo/Geneva, December 2003.

- PingER: a lightweight active end-to-end network monitoring tool/project, presented by Les Cottrell at the RIPE 46 Meeting, Amsterdam, Sept. 2003.

- Internet End-to-end Performance Monitoring (IEPM) and the PingER project (PowerPoint or PDF). Presented by Warren Matthews at the National Collaboratory Middleware and Network

- Digital Divide and PingER, presented by Les Cottrell at the ICFA/SCIC meeting, July 2003. Also available in PPT.

- Quantifying the Digital Divide, presentation by Les Cottrell to the ICFA-SCIC group, May 2003.

- Quantifying the Digital Divide, presented by Warren Matthews at the Internet2 Spring Members Meeting, Arlington, VA, April 2003, at the Hard to Network Places BOF (PPT).

Internet performance is improving each year with losses typically improving

by 40-50% per year and RTTs by 10-20% and, for some regions such as S. E. Europe,

even more. Geosynchronous satellite connections are still important to countries

with poor telecommunications infrastructure or in remote land-locked regions. In general for HEP countries

satellite links are being replaced with land-line links with improved

performance (in particular for RTT).

Links between the more developed regions including Anglo America, Japan and

Europe are much better than elsewhere (5 - 10 times more throughput achievable).

Regions such as S. E. Europe, Russia and Central Asia appear to be catching up

with developed regions such as Europe. At the present rate of progress S. E.

Europe should catch up in a couple of years and Russia, Central Asia and the

Caucasus in the next five to seven years. China, the Middle East and Latin

America are improving at a similar rate to the more developed regions. However,

India and Africa are further behind and India in particular appears to be

falling further behind. Though there is less extensive data, similar results are

seen in measurements made from Europe. Countries/regions with particularly bad

connections include the Caucasus, India, and Africa. There has been a

dramatic improvement in the Internet performance for most of the world's

connected population in the last 2 years.

There is a positive correlation

between the various economic and development indices. Besides being useful in

their own right these indices are an excellent way to illustrate anomalies and

for pointing out measurement/analysis problems. The large variations between

sites within a given country illustrate the need for careful checking of the

results and the need for multiple sites/country to identify anomalies. PingER

has increased its coverage by over 25% (in number of countries) in the last year

despite increasing prevalence of ping blocking. As a result a larger fraction

(~90%) of the world's online connected population is now represented by the PingER

data.

The ICFA-SCIC "Digital Divide" report will dwell in more detail on many of

the issues of the performance differences for the developed and less

well-developed countries.

The monitoring covers most countries with formal HEP programs appearing in

the "Pocket Diary for Physicists". The monitoring should be extended to include

>=2 hosts in each Developing Country

with a formal HEP program.

There is also interest from ICFA, ICTP and others to extend

the monitoring further to countries with no formal HEP programs, but where there

are needs to understand the Internet connectivity performance in order to aid

the development of science. Africa is a region with many such countries.

Extend the monitoring of developing countries from Europe by increasing the

number of sites/hosts in developing countries monitored from CERN.

The collaboration with the ICTP has been very fruitful in bringing in contacts from developing nations with scientific interests. We recommend continuing to work with the ICTP/eJDS project to further publicise, and gather new entries for, the monitoring of developing countries. Special emphasis will be placed on incorporating African countries and, in particular, sites in remote areas into the monitoring.

We should ensure there are >=2 remote sites monitored in each Developing Country. All results should continue to be made available publicly via the web, and publicized to the HEP community and others. Typically HENP leads other sciences in its needs and in developing an understanding and solutions. The outreach from HENP to other sciences is to be encouraged. The results should continue to be publicized more widely, for example at the CHEPREO meeting in Rio de Janeiro in February 2003.

We need assistance from ICFA and others to find sites to monitor and contacts

in the following countries:

- Latin America: Venezuela (need > 1 host/country), Costa Rica [h, *], Honduras, El Salvador, Belize, Panama, Bolivia (have none)

- Vietnam [*]

- Belarus [h] (need > 1)

- Africa: Burkina Faso, Egypt, Ghana, Malawi, Nigeria, Senegal, Somalia (need > 1/country), Kenya, Libya, Nigeria, Sudan (have none)

Depending on availability of funding:

- simplify and where possible automate the procedures to analyze and create

the summary statistical information (graphs and tables seen in the current

report) at regular intervals;

- develop automated methods to discover non-responsive hosts, make extra

tests to pin-point reasons for non-responsiveness, and report to administrator

together with contact email addresses.
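As a rough indication of what the second item might involve, the sketch below flags hosts that answered none of the pings sent to them and prepares one report line per host with its contact address. It is only an outline under stated assumptions: the `HostSummary` record, the function names, the host names and the e-mail addresses are all hypothetical, and the extra diagnostic tests are not attempted.

```python
from dataclasses import dataclass


@dataclass
class HostSummary:
    """Hypothetical per-host ping totals for one reporting period."""
    host: str
    contact_email: str
    pings_sent: int
    pings_received: int


def non_responsive(summaries):
    """Return hosts that were probed but answered no pings at all."""
    return [s for s in summaries if s.pings_sent > 0 and s.pings_received == 0]


def report_lines(summaries):
    """Build one human-readable line per non-responsive host."""
    return [
        f"{s.host}: 0 of {s.pings_sent} pings answered; contact {s.contact_email}"
        for s in non_responsive(summaries)
    ]


# Placeholder hosts and contacts, for illustration only.
summaries = [
    HostSummary("host-a.example.org", "admin-a@example.org", 480, 471),
    HostSummary("host-b.example.org", "admin-b@example.org", 480, 0),
]
lines = report_lines(summaries)
for line in lines:
    print(line)
```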

Although not a recommendation per se, it would be disingenuous to finish without noting the following. SLAC & FNAL are the leaders in the PingER project. The funding for the PingER effort has come from the DoE MICS office since 1997; however, it terminated at the end of September 2003, since it was being funded as research and the development is no longer regarded as a research project. To continue the effort at a minimum level (maintain data collection, explain needs, reopen connections, open firewall blocks, find replacement hosts, make limited special analyses, prepare & make presentations, respond to questions) would probably require central funding at a level of about 50% of a Full Time Equivalent (FTE) person, plus travel. To extend and enhance the project (extend the code for new environments (more countries, more data collections), fix known non-critical bugs, improve visualization, automate reports generated by hand today, find new country site contacts, add route histories and visualization, automate alarms, update the web site for better navigation, add more Developing Country monitoring sites/countries, improve code portability) would take an additional 50% of an FTE (for a total of 1 FTE plus travel). Without funding, the future of PingER and reports such as this one is unclear, and the level of effort sustained in 2003 will not be possible in 2004. Many agencies/organizations (e.g. DoE, ESnet, NSF, ICFA, ICTP, IDRC, UNESCO) have expressed interest in this work but none can (or are allowed to) fund it. We are currently seeking funding from the Canadian IDRC to assist in extending the monitoring to Africa.

The following table lists the 104 countries currently (January 1st 2004) in the PingER database. Each country contains zero or more sites that are being or have been monitored by PingER from SLAC. The countries with zero hosts arise as follows: Australian academic hosts appear to have pings blocked to them at the Washington GigaPoP in Seattle; the only host in Costa Rica that we used to monitor objected; and the Vietnam hosts we used to monitor now block pings, and we are unable to find a host that does not block pings. The numbers in the columns to the right of the country name are the number of hosts monitored in that country. The number cell is colored red for zero hosts, yellow for one host and green for 2 or more hosts for the country. The 45 countries marked in orange are developing countries for which we only monitor one site in the country.
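The colour coding described above amounts to a simple rule, sketched here for reference. The function names are hypothetical; the thresholds and colours are those given in the text.

```python
def host_count_color(host_count):
    """Colour of the number cell for a country's monitored-host count."""
    if host_count < 0:
        raise ValueError("host count cannot be negative")
    if host_count == 0:
        return "red"
    if host_count == 1:
        return "yellow"
    return "green"


def marked_orange(is_developing, host_count):
    """True for developing countries with exactly one monitored site."""
    return is_developing and host_count == 1


for count in (0, 1, 2, 5):
    print(count, host_count_color(count))
```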

[Advisor]

http://dast.nlanr.net/Projects/Advisor/

[africa] Mike Jensen, "African Internet

Connectivity". Available http://www3.sn.apc.org/africa/afrmain.htm

[africa-rtm] Enrique Canessa,

"Real time network monitoring in Africa -

A proposal - (Quantifying the Digital Divide)". Available

http://www.ictp.trieste.it/~ejds/seminars2002/Enrique_Canessa/index.html

[bbcp] Andrew Hanushevsky, Artem Trunov, and Les Cottrell, "P2P Data Copy

Program bbcp", CHEP01, Beijing 2002. Available at

http://www.slac.stanford.edu/~abh/CHEP2001/p2p_bbcp.htm

[bbftp] "Bbftp".

Available http://doc.in2p3.fr/bbftp/.

[bullot] "TCP Stacks Testbed", Hadrien Bullot and R. Les Cottrell. Available at

http://www-iepm.slac.stanford.edu/bw/tcp-eval/

[coccetti] "TCP Stacks on Production Links", Fabrizio Coccetti and R. Les

Cottrell. Available at

http://www-iepm.slac.stanford.edu/monitoring/bulk/tcpstacks/

[E2Epi] http://e2epi.internet2.edu/

[ejds-email] Hilda Cerdeira and the

eJDS Team, ICTP/TWAS Donation Programme, "Internet Monitoring of Universities

and Research Centers in Developing Countries". Available

http://www.slac.stanford.edu/xorg/icfa/icfa-net-paper-dec02/ejds-email.txt

[ejds-africa] "Internet Performance to Africa" R. Les Cottrell and Enrique

Canessa, Developing Countries Access to Scientific Knowledge: Quantifying the

Digital Divide, ICTP Trieste, October 2003; also SLAC-PUB-10188. Available

http://www.ejds.org/meeting2003/ictp/papers/Cottrell-Canessa.pdf

[ejds-pinger] "PingER History and Methodology", R. Les Cottrell, Connie Logg and

Jerrod Williams. Developing Countries Access to Scientific Knowledge:

Quantifying the Digital Divide, ICTP Trieste, October 2003; also SLAC-PUB-10187.

Available

http://www.ejds.org/meeting2003/ictp/papers/Cottrell-Logg.pdf

[EMA] http://monalisa.cern.ch/EMA/

[floyd] S. Floyd, "HighSpeed TCP for Large Congestion Windows", Internet

draft draft-floyd-tcp-highspeed-01.txt, work in progress, 2002. Available

http://www.icir.org/floyd/hstcp.html

[gridftp] "The GridFTP Protocol and Software". Available

http://www.globus.org/datagrid/gridftp.html

[host-req] "Requirements for WAN Hosts being Monitored", Les Cottrell and Tom

Glanzman. Available at

http://www.slac.stanford.edu/comp/net/wan-req.html

[icfa-98] "May 1998 Report of the ICFA NTF Monitoring Working Group".

Available http://www.slac.stanford.edu/xorg/icfa/ntf/

[icfa-mar02]

"ICFA/SCIC meeting at CERN in March 2002". Available

http://www.slac.stanford.edu/grp/scs/trip/cottrell-icfa-mar02.html

[icfa-jan03] "January 2003 Report of the ICFA-SCIC Monitoring Working Group".

Available http://www.slac.stanford.edu/xorg/icfa/icfa-net-paper-dec02/

[iepm]

"Internet End-to-end Performance Monitoring - Bandwidth to the World Project".

Available http://www-iepm.slac.stanford.edu/bw

[ictp] Developing

Country Access to On-Line Scientific Publishing: Sustainable Alternatives,

Round Table meeting held at ICTP Trieste, Oct 2002. Available

http://www.ictp.trieste.it/~ejds/seminars2002/program.html

[ictp-jensen]

Mike Jensen, "Connectivity

Mapping in Africa", presentation at the ICTP Round Table on Developing

Country Access to On-Line Scientific Publishing: Sustainable Alternatives at

ICTP, Trieste, October 2002. Available

http://www.ictp.trieste.it/~ejds/seminars2002/Mike_Jensen/jensen-full.ppt

[ictp-rec] Recommendations of the Round Table held in Trieste to help bridge the digital divide.

Available

http://www.ictp.trieste.it/ejournals/meeting2002/Recommen_Trieste.pdf

[kuzmanovic] "HSTCP-LP: A Protocol for Low-Priority Bulk Data Transfer in

High-Speed High-RTT Networks", Alexander Kuzmanovic, Edward Knightly and R. Les

Cottrell. Available at

http://dsd.lbl.gov/DIDC/PFLDnet2004/papers/Kuzmanovic.pdf

[low] S. Low, "Duality model of TCP/AQM + Stabilized Vegas". Available

http://netlab.caltech.edu/FAST/meetings/2002july/fast020702.ppt

[mathis] M.

Mathis, J. Semke, J. Mahdavi, T. Ott, "The Macroscopic

Behavior of the TCP Congestion Avoidance Algorithm", Computer

Communication Review, volume 27, number 3, pp. 67-82, July 1997

[MonALISA]

http://monalisa.cacr.caltech.edu/

[NDT]

http://miranda.ctd.anl.gov:7123/

[NMWG] http://www-didc.lbl.gov/NMWG/

[nua] NUA

Internet Surveys, "How many

Online". Available http://www.nua.ie/surveys/how_many_online/

[os] "TCP

Tuning Guide". Available http://www-didc.lbl.gov/TCP-tuning/

[pinger]

"PingER". Available http://www-iepm.slac.stanford.edu/pinger/;

W. Matthews and R. L. Cottrell, "The PingER Project: Active Internet Performance

Monitoring for the HENP Community", IEEE Communications Magazine Vol. 38 No. 5

pp 130-136, May 2000.

[pinger-deploy] "PingER Deployment". Available http://www.slac.stanford.edu/comp/net/wan-mon/deploy.html

[PiPES] http://e2epi.internet2.edu/

[qbss] "SLAC QBSS Measurements". Available

http://www-iepm.slac.stanford.edu/monitoring/qbss/measure.html

[ravot] J. P.

Martin-Flatin and S. Ravot, "TCP Congestion Control in Fast Long-Distance

Networks", Technical Report CALT-68-2398, California Institute of Technology,

July 2002. Available

http://netlab.caltech.edu/FAST/publications/caltech-tr-68-2398.pdf

[tsunami]

"Tsunami". Available

http://ncne.nlanr.net/training/techs/2002/0728/presentations/pptfiles/200207-wallace1.ppt

[tutorial] R. L. Cottrell, "Tutorial on Internet Monitoring & PingER at

SLAC". Available http://www.slac.stanford.edu/comp/net/wan-mon/tutorial.html

[un]

"United Nations Population Division World Population Prospects Population

database". Available http://esa.un.org/unpp/definition.html

1. In special cases, there is an option to reduce the network impact to ~10 bits/s per monitor-remote host pair.

2. Since North America officially includes Mexico, we follow the Encyclopedia

Britannica recommendation and use the terminology Anglo America (US + Canada)

and Latin America. Unfortunately many of the figures use the term N. America

for what should be Anglo America.

h. These countries appear in the Particle

Data Group diary and so would appear to have HENP programs.

*. These countries are no longer monitored, usually because the host no longer exists or pings are blocked.