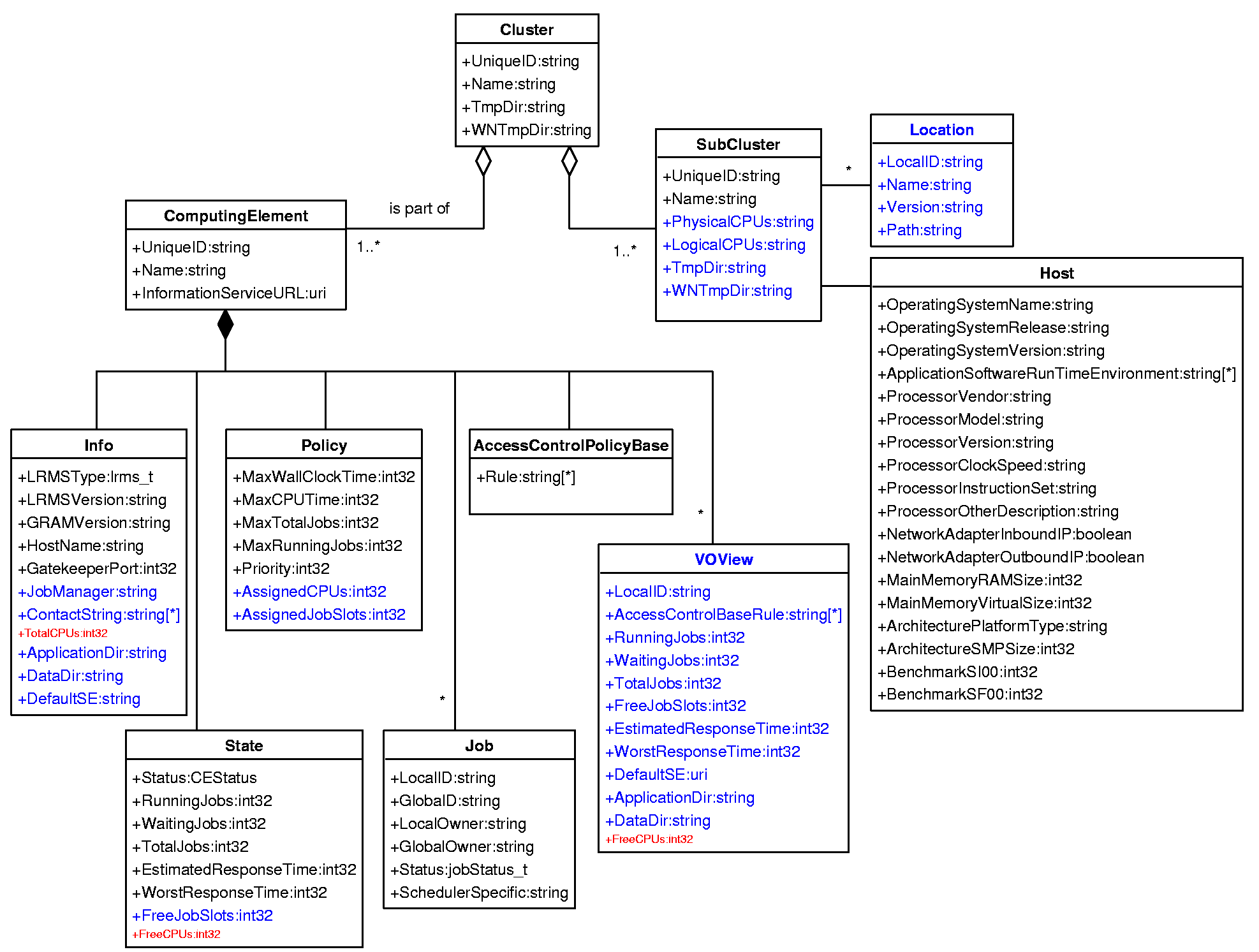

- At a 'global' scale, we can barely afford to choose the batch-system queue to submit to ==>

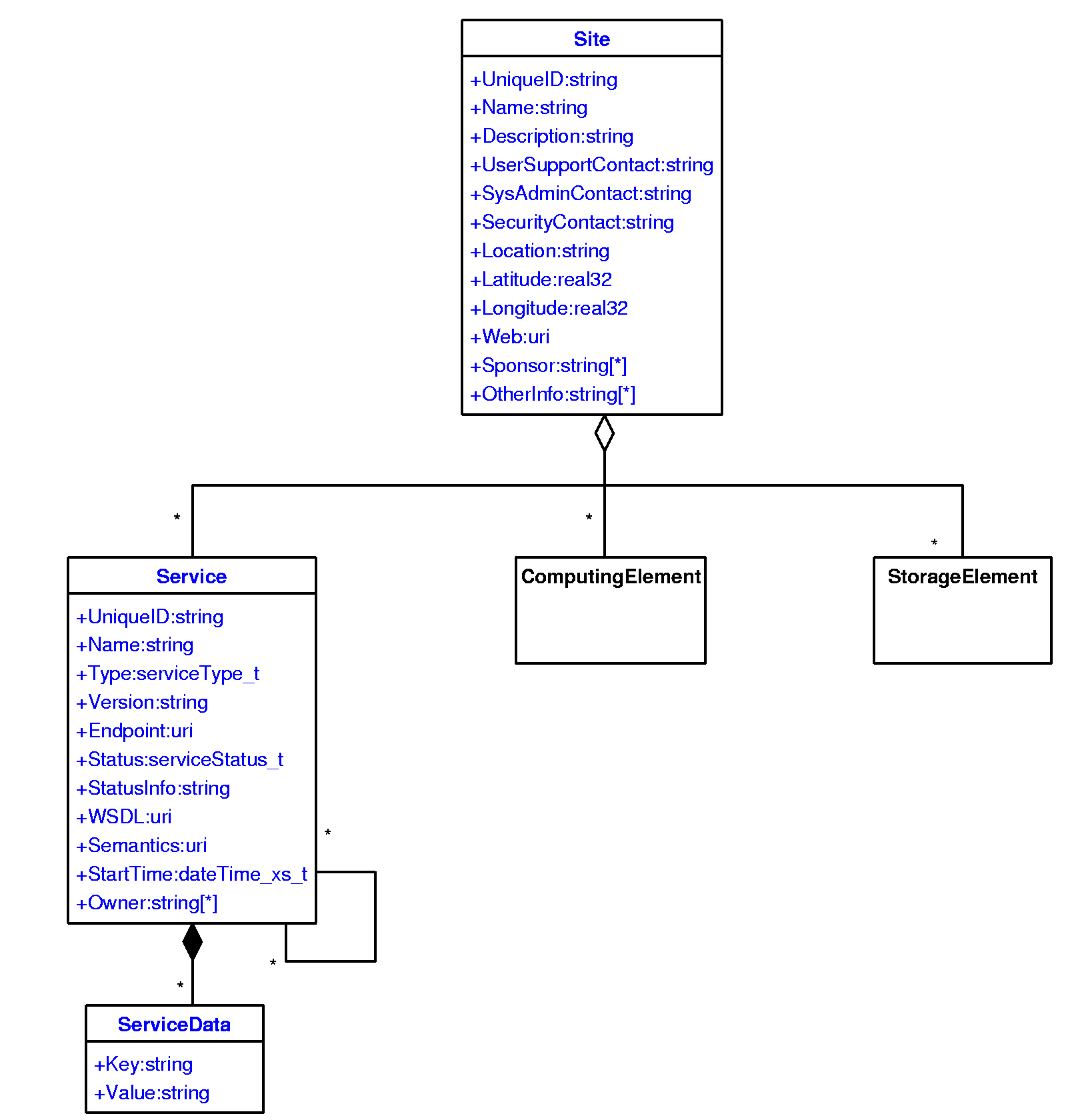
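What matchmaking at queue granularity amounts to can be sketched as follows, assuming each queue is published as one flat record. The broker can filter queues and rank them, but it never picks an individual worker node. The attribute comments follow the Glue names used in ISM entries. The `Queue` type, the `select_queue` function, the example queue IDs, and the choice of ranking by `GlueCEStateEstimatedResponseTime` are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Queue:
    """Hypothetical flat, queue-level view of one CE (one ISM entry)."""
    ce_id: str                  # GlueCEUniqueID
    free_cpus: int              # GlueCEStateFreeCPUs
    est_response_time: int      # GlueCEStateEstimatedResponseTime (seconds)
    access_rules: List[str] = field(default_factory=list)  # GlueCEAccessControlBaseRule


def select_queue(queues: List[Queue], vo: str,
                 requirements: Callable[[Queue], bool]) -> Optional[str]:
    """Return the ID of the best matching queue, or None if nothing matches.

    The only decision taken here is *which queue*; the batch system behind
    that queue later decides which host actually runs the job.
    """
    candidates = [
        q for q in queues
        if f"VO:{vo}" in q.access_rules and requirements(q)
    ]
    if not candidates:
        return None
    # Assumed rank: prefer the shortest estimated response time.
    best = min(candidates, key=lambda q: q.est_response_time)
    return best.ce_id


queues = [
    Queue("ce-a:2119/blah-pbs-long", free_cpus=0, est_response_time=600, access_rules=["VO:EGEE"]),
    Queue("ce-b:2119/blah-pbs-short", free_cpus=4, est_response_time=30, access_rules=["VO:EGEE"]),
    Queue("ce-c:2119/blah-lsf-grid", free_cpus=8, est_response_time=10, access_rules=["VO:OTHER"]),
]
print(select_queue(queues, "EGEE", lambda q: q.free_cpus > 0))  # ce-b:2119/blah-pbs-short
```

Ranking by estimated response time is only one possible choice. Any rank expression over queue-level attributes fits the same shape.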

- The schema is flattened. In particular, the host characteristics have to represent some form of lowest common denominator of the hosts in the queue.
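Flattening to a common denominator can be sketched as follows. This assumes the information provider takes the minimum of each numeric host property and the intersection of the installed software tags across the hosts of a queue, so every published value holds on whichever host the batch system eventually picks. The `flatten_hosts` function and its per-host input format are hypothetical. Only the output keys are Glue attribute names.

```python
from typing import Dict, List, Set


def flatten_hosts(hosts: List[Dict]) -> Dict:
    """Collapse per-host descriptions into one queue-level record.

    Numeric properties take the minimum over all hosts; software tags take
    the intersection, so each published value is valid for every host.
    """
    if not hosts:
        raise ValueError("a queue needs at least one host")
    numeric = ("GlueHostMainMemoryRAMSize",
               "GlueHostProcessorClockSpeed",
               "GlueHostBenchmarkSI00")
    flat = {key: min(h[key] for h in hosts) for key in numeric}
    tags: Set[str] = set(hosts[0]["RunTimeEnvironment"])
    for h in hosts[1:]:
        tags &= set(h["RunTimeEnvironment"])
    flat["GlueHostApplicationSoftwareRunTimeEnvironment"] = sorted(tags)
    return flat


# Two hypothetical hosts behind the same queue.
hosts = [
    {"GlueHostMainMemoryRAMSize": 1001, "GlueHostProcessorClockSpeed": 1000,
     "GlueHostBenchmarkSI00": 400, "RunTimeEnvironment": ["GLITE_1_2", "APP1", "APP2"]},
    {"GlueHostMainMemoryRAMSize": 4096, "GlueHostProcessorClockSpeed": 3000,
     "GlueHostBenchmarkSI00": 1200, "RunTimeEnvironment": ["GLITE_1_2", "APP1"]},
]
print(flatten_hosts(hosts))
```

Under this assumption, a job asking for 2048 MB of RAM is rejected at queue level, even though the second host alone could run it.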

      [
        id = "atlfarm006.mi.infn.it:2119/blah-pbs-long";
        update_time = 1128351882;
        expiry_time = 300;
        info =
          [
            GlueCEInfoGatekeeperPort = 2119;
            GlueCEStateStatus = "Production";
            GlueSubClusterUniqueID = "atlfarm006.mi.infn.it";
            PurchasedBy = "ism_cemon_asynch_purchaser";
            GlueCESEBindGroupSEUniqueID = { "gridftp05.cern.ch" };
            GlueCESEBindGroupCEUniqueID = "atlfarm006.mi.infn.it:2119/blah-pbs-long";
            GlueHostArchitectureSMPSize = 2;
            GlueHostMainMemoryVirtualSize = 2002;
            GlobusResourceContactString = "atlfarm006.mi.infn.it:2119/blah-pbs";
            TTLCEinfo = 300;
            GlueHostOperatingSystemVersion = "3.0.3";
            QueueName = "infinite";
            GlueCEStateFreeCPUs = 1;
            GlueCEInfoTotalCPUs = 1;
            LRMSType = "pbs";
            GlueHostProcessorModel = "PIII";
            GlueCEStateWaitingJobs = 0;
            GlueSubClusterName = "atlfarm006.mi.infn.it";
            GlueCEPolicyMaxWallClockTime = 172800;
            GlueCESEBindSEUniqueID = "gridftp05.cern.ch";
            GlueCEStateTotalJobs = 0;
            GlueHostOperatingSystemRelease = "3.0.3";
            GlueCEPolicyMaxCPUTime = 172800;
            CEid = "atlfarm006.mi.infn.it:2119/blah-pbs-long";
            GlueHostProcessorVendor = "intel";
            GlueHostMainMemoryRAMSize = 1001;
            AuthorizationCheck =
              member(other.CertificateSubject, GlueCEAccessControlBaseRule) ||
              member(strcat("VO:", other.VirtualOrganisation),
                     GlueCEAccessControlBaseRule);
            CloseStorageElements =
              {
                [
                  mount = "/mn/SE2";
                  name = "gridftp05.cern.ch"
                ]
              };
            GlueCEStateRunningJobs = 0;
            GlueCEHostingCluster = "atlfarm006.mi.infn.it";
            GlueHostBenchmarkSI00 = 400;
            GlueClusterUniqueID = "atlfarm006.mi.infn.it";
            GlueForeignKey =
              {
                "GlueClusterUniqueID=atlfarm006.mi.infn.it",
                "GlueCEUniqueID=atlfarm006.mi.infn.it:2119/blah-pbs-long"
              };
            GlueCEInfoLRMSType = "pbs";
            GlueCEInfoHostName = "atlfarm006.mi.infn.it";
            GlueHostOperatingSystemName = "SLC";
            GlueCEStateEstimatedResponseTime = 0;
            GlueChunkKey = { "GlueClusterUniqueID=atlfarm006.mi.infn.it" };
            GlueClusterService = { "atlfarm006.mi.infn.it" };
            CloseOutputSECheck =
              IsUndefined(other.OutputSE) ||
              member(other.OutputSE, GlueCESEBindGroupSEUniqueID);
            GlueHostApplicationSoftwareRunTimeEnvironment =
              {
                "GLITE_1_2",
                "APP1",
                "APP2",
                "APP3",
                "APP4",
                "APP5"
              };
            GlueCEInfoLRMSVersion = "Torque_1.0";
            GlueClusterName = "atlfarm006.mi.infn.it";
            GlueHostNetworkAdapterOutboundIP = true;
            GlueHostProcessorClockSpeed = 1000;
            GlueCEAccessControlBaseRule = { "VO:EGEE" };
            GlueCEPolicyMaxRunningJobs = 99999;
            GlueHostBenchmarkSF00 = 380;
            GlueCEName = "infinite";
            GlueHostNetworkAdapterInboundIP = false;
            GlueCEStateWorstResponseTime = 0;
            GlueCEUniqueID = "atlfarm006.mi.infn.it:2119/blah-pbs-long";
            GlueInformationServiceURL = { undefined, undefined, undefined };
            GlueCEPolicyMaxTotalJobs = 999999;
            GlueCEPolicyPriority = 1
          ]
      ]
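Besides the Glue attributes, the entry carries `update_time`/`expiry_time` bookkeeping and two ClassAd expressions, `AuthorizationCheck` and `CloseOutputSECheck`, which refer to the job ad as `other` and are evaluated during matchmaking. The sketch below mirrors that logic in Python. It assumes `update_time` is a Unix timestamp and that an entry is usable only while `now < update_time + expiry_time`. The function names, the dict-based job representation, and the certificate subject are hypothetical. The hand translation is illustrative only; it is not how the WMS evaluates the expressions.

```python
import time
from typing import Dict, Optional

# Subset of the ISM entry, transcribed by hand.
ENTRY = {
    "update_time": 1128351882,
    "expiry_time": 300,
    "info": {
        "GlueCEAccessControlBaseRule": ["VO:EGEE"],
        "GlueCESEBindGroupSEUniqueID": ["gridftp05.cern.ch"],
    },
}


def is_fresh(entry: Dict, now: Optional[float] = None) -> bool:
    """Assumed semantics: valid for expiry_time seconds after update_time."""
    if now is None:
        now = time.time()
    return now < entry["update_time"] + entry["expiry_time"]


def authorization_check(info: Dict, job: Dict) -> bool:
    """Python rendering of AuthorizationCheck: subject or VO must be listed."""
    rules = info["GlueCEAccessControlBaseRule"]
    return (job.get("CertificateSubject") in rules
            or f"VO:{job.get('VirtualOrganisation')}" in rules)


def close_output_se_check(info: Dict, job: Dict) -> bool:
    """Python rendering of CloseOutputSECheck: no OutputSE, or a close one."""
    output_se = job.get("OutputSE")  # None stands in for ClassAd 'undefined'
    return output_se is None or output_se in info["GlueCESEBindGroupSEUniqueID"]


job = {"CertificateSubject": "/C=IT/O=Example/CN=Some User",
       "VirtualOrganisation": "EGEE", "OutputSE": "gridftp05.cern.ch"}
info = ENTRY["info"]
print(authorization_check(info, job), close_output_se_check(info, job))  # True True
print(is_fresh(ENTRY, now=ENTRY["update_time"] + 299))  # True
print(is_fresh(ENTRY, now=ENTRY["update_time"] + 300))  # False
```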